The NAS app is compatible with Appliance Controller 2.0 and later. To install or update the NAS app, see Manage Applications for instructions about the App Store.

A NAS cluster provides users the ability to access NAS shares served by any of the cluster's nodes. Through NAS clusters, you can take advantage of additional features built into StorNext NAS, such as NAS failover to ensure that users can always access NAS shares, or DNS load distribution to maintain a desirable level of network response time.

NAS Cluster Configuration Requirements and Considerations

Make sure you can see the nodes that will make up the cluster in the NAS app. See Configure a NAS Node.

In addition, enable file locking on a hosted StorNext volume (file system) that will maintain configuration information about the NAS cluster. Each node within the NAS cluster must be able to access this file system. See Enable File Locking in the StorNext GUI.

To take advantage of DNS load distribution, you must be running StorNext 6 or later.

Both SMB and NFSv3 protocols are automatically enabled for NAS clusters. For SMB-based environments, all NAS cluster nodes must be running the same version of SMB.

The NAS app does not support configuration for the NFSv4 protocol. NFSv3 is the default, and NFSv4 is not supported with load distribution. If your environment uses NFSv4, and you had enabled NFSv4 for NFS HA using the NAS app or the Appliance Controller Console, you can use the NAS app for management of your NAS environment. However, if NFSv4 is enabled, you can use DNS load distribution for SMB shares only.

You can manually enable or disable NFSv4 on Xcellis Workflow Director server nodes through the Appliance Controller Console. See Disable NFSv4 Services on the Appliance Controller Documentation Center.

The NAS app supports configuration of scale-out NAS clusters. A scale-out NAS cluster provides clients direct access to both SMB and NFSv3 shares from any node within the cluster. In addition, a scale-out NAS cluster manages both health monitoring and failover for the NAS cluster nodes. Of the StorNext NAS cluster configurations, scale-out NAS clusters offer the best possible performance and reliability.

Scale-out NAS clusters can be made up of a combination of the following components:

- Xcellis Workflow Director, G300, and Xcellis Workflow Extender systems

- Appliances running CentOS 7 and CentOS 6

- StorNext 6.x and StorNext 5.4.x

- Up to 16 NAS cluster nodes

Scale-out NAS clustering on Artico systems can be configured in an active-active failover relationship, but you cannot configure the cluster with more than two Artico server nodes.

You can create one NAS cluster per StorNext Connect workspace (cluster). To configure an additional NAS cluster, you need an additional workspace. See About the Discover Components App. A node that mounts volumes (file systems) from multiple workspaces can only be a part of one NAS cluster.

To create a NAS cluster:

- Click Create NAS cluster on the main page of the NAS app to open the Create NAS cluster page.

- Select the StorNext Connect workspace from which to create the NAS cluster.

- Enter a name to identify the NAS cluster in the app. Valid values are alphanumeric characters and dashes.

- Enter the NAS cluster's master virtual IP (VIP) address, used for managing NAS failover.

Use an address in the same network and subnet used by the NAS cluster and NAS clients. The master VIP will be on the active master node. If the active master node becomes unavailable, the master VIP transfers services to the next available node.

- Select a volume (file system) accessible to all nodes in the NAS cluster, used to store NAS cluster information. You cannot change the value later.

Note: Make sure you first enable file locking on the volume (file system) in the StorNext GUI. See Enable File Locking in the StorNext GUI.

- Optionally, enter a controller VIP that StorNext Connect can use to manage NAS configuration.

Additional Information

If you do not define the controller VIP or it fails, the master VIP is used. If the master VIP fails, the master node’s IP address is used.

You need to add a controller VIP if your environment has multiple network interfaces, and StorNext Connect is located on a different network from NAS management services and the NAS clients.

- Click Add/Remove nodes to open the node selection table.

- In the table, select the check box(es) of the server node(s) that you want to be part of the NAS cluster.

- Click in the Master column to select the NAS cluster's preferred master node.

Additional Information

When making a NAS cluster of server nodes where shares have been defined, only the master node's shares remain accessible by NAS cluster clients. Shares of non-master nodes are not lost, but they will not be remotely accessible.

When making a NAS cluster of server nodes where the authentication type has been defined, the master node synchronizes its authentication configuration to all other nodes in the cluster.

- If your environment has multiple network interfaces, select the NAS IP address for each node. Use an address in the same network and subnet as the NAS cluster's master VIP.

WARNING: The docker port IP address (for example, 172.x.x.x) might appear in the list of available NAS IP addresses. DO NOT select the docker port IP address.

- When you are done selecting the nodes for the NAS cluster, click OK.

- Click Apply to save the configuration and create the NAS cluster.

- The NAS app communicates with the Appliance Controller, showing the steps of the configuration as it completes. Click OK when the configuration is complete to return to the main page of the app.

If the configuration fails, you can review and copy the configuration details for troubleshooting.
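The input rules on the Create NAS cluster page can be checked before you click Apply. The following Python sketch is a hypothetical pre-flight helper, not part of the NAS app: `check_cluster_inputs` and its parameters are assumptions, the name rule and same-subnet rule come from the steps above, and treating addresses in 172.16.0.0/12 as docker port IPs is only a heuristic.

```python
import ipaddress
import re

# Cluster names: alphanumeric characters and dashes (ASCII letters assumed).
_NAME_RE = re.compile(r"[A-Za-z0-9-]+")

# Heuristic (assumption): Docker bridge networks are commonly allocated from
# 172.16.0.0/12, for example 172.17.0.1 on the default docker0 bridge.
_DOCKER_RANGE = ipaddress.ip_network("172.16.0.0/12")


def check_cluster_inputs(name, master_vip, client_network, node_nas_ips):
    """Return a list of problems; an empty list means the inputs look valid.

    client_network: CIDR of the network/subnet shared by the NAS cluster and
    its clients, e.g. "10.20.30.0/24" (hypothetical value).
    node_nas_ips: maps each node name to the NAS IP selected for it.
    """
    problems = []
    net = ipaddress.ip_network(client_network)

    if not _NAME_RE.fullmatch(name):
        problems.append(f"invalid cluster name: {name!r}")

    # The master VIP must be in the same network and subnet as the cluster and clients.
    if ipaddress.ip_address(master_vip) not in net:
        problems.append(f"master VIP {master_vip} is outside {net}")

    for node, ip in node_nas_ips.items():
        addr = ipaddress.ip_address(ip)
        if addr in _DOCKER_RANGE:
            problems.append(f"{node}: {ip} looks like a docker port IP")
        elif addr not in net:
            problems.append(f"{node}: NAS IP {ip} is outside {net}")
    return problems


print(check_cluster_inputs("nas-prod1", "10.20.30.100", "10.20.30.0/24",
                           {"node1": "10.20.30.11", "node2": "172.17.0.1"}))
# ['node2: 172.17.0.1 looks like a docker port IP']
```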

What's Next?

The next step is to Configure NAS Authentication.

Click the NAS cluster name hyperlink on the main page of the app to view and edit the NAS cluster's configuration, shares, authentication, and load balancing. You can view and edit the NAS cluster configuration on the Cluster tab.

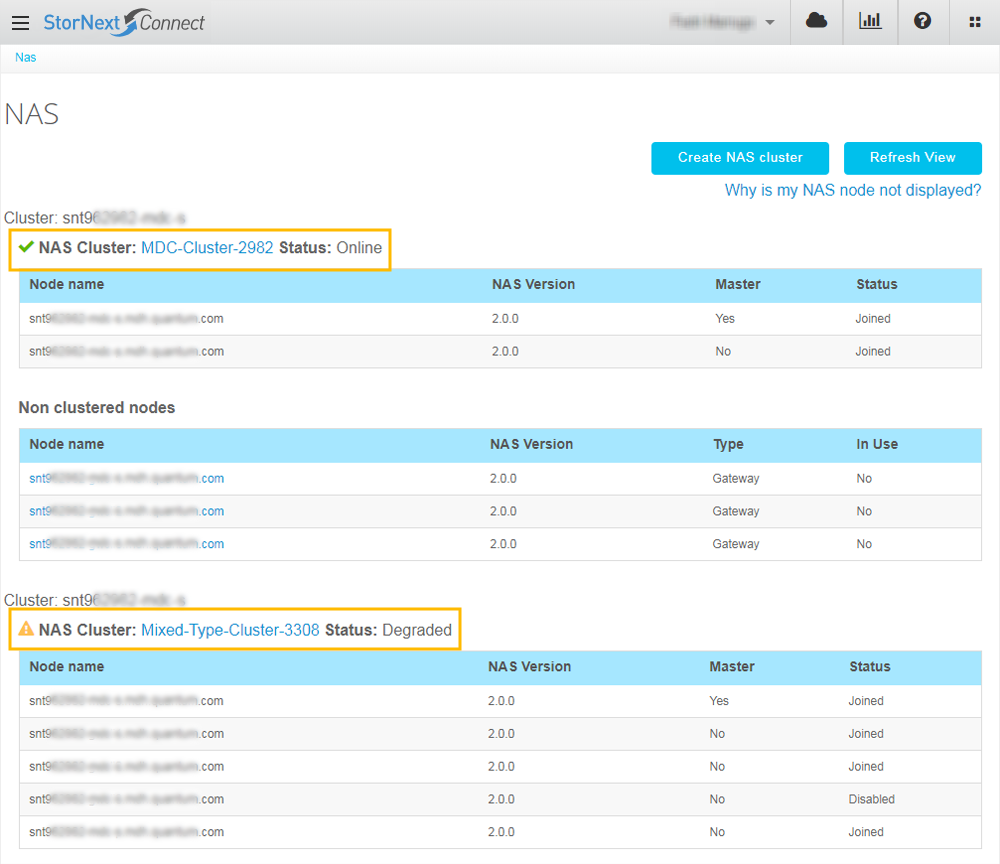

The main page of the NAS app shows the status of the NAS cluster.

| Status | Description |
|---|---|
| Online | All nodes in the NAS cluster are in a joined state. |
| Degraded | One or more nodes in the NAS cluster are not in a joined state. |

The Cluster tab of the NAS app shows the states of NAS cluster nodes and allows you to take action on the nodes.

| Current State | State Definition | Master Node | Change to State Options |
|---|---|---|---|
| Joined | The node is ready to take client connections and to become the master node in the event of a NAS failover. | Yes | |
| | | No | |
| Enabled | The node is part of the NAS cluster, but it is not ready to take client connections. | Yes | |
| | | No | |
| Not Ready | The node has left the cluster, or the Appliance Controller cannot reach the node. This could be the result of a remote Controller not running, a network issue, or another error with cluster management processes. | No | |
| Disabled | The node is part of the NAS cluster, but it can neither take client connections nor become the master node in the event of a NAS failover. You can place a node in a disabled state before performing node maintenance, or if the node has gone offline. | Yes | |
| | | No | |
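The state definitions above can be read as a small model of which nodes serve clients and how the main-page status is derived. This Python sketch is illustrative only: the names are hypothetical, and treating Enabled and Not Ready nodes as ineligible to become master is an assumption the table does not state.

```python
from enum import Enum


class NodeState(Enum):
    JOINED = "Joined"
    ENABLED = "Enabled"
    NOT_READY = "Not Ready"
    DISABLED = "Disabled"


def takes_client_connections(state: NodeState) -> bool:
    # Per the table, only a joined node is ready to take client connections.
    return state is NodeState.JOINED


def can_become_master(state: NodeState) -> bool:
    # Joined: yes; Disabled: no (per the table).
    # Enabled and Not Ready: assumed no.
    return state is NodeState.JOINED


def cluster_status(states: list[NodeState]) -> str:
    # Main page: Online if every node is joined, otherwise Degraded.
    return "Online" if all(s is NodeState.JOINED for s in states) else "Degraded"


print(cluster_status([NodeState.JOINED, NodeState.JOINED]))    # Online
print(cluster_status([NodeState.JOINED, NodeState.DISABLED]))  # Degraded
```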

To add nodes to a NAS cluster:

- Click the NAS cluster name hyperlink on the main page of the app.

- Click the Cluster tab.

- Click Add nodes.

- Select the check box(es) of the node(s) that you want to add to the NAS cluster.

- Click OK.

- Click Apply.

- The NAS app communicates with the Appliance Controller, showing the steps of the configuration as it completes. Click OK when the configuration is complete to return to the main page of the app.

The server node(s) are joined to the NAS cluster.

If the configuration fails, you can review and copy the configuration details for troubleshooting.

To remove a node from a NAS cluster, delete the NAS cluster and re-create it with the desired nodes. See Delete a NAS Cluster.

If you need to perform maintenance on a node, you can place the node in a disabled state. See Manage Nodes for Maintenance.

You might need to transfer NAS management services to another node if you want to move NAS management services back to the preferred master node or because StorNext File System Management is running on the same node.

Another node in the NAS cluster must be in a joined state to accept NAS management services. If no other node is in a joined state, the master node does not change.
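The rule above (another node must be joined, otherwise the master does not change) can be sketched in Python. `release_master` is hypothetical, and reading "the next node" as the next joined node in cluster order, wrapping around to the start, is an assumption; the Appliance Controller may order nodes differently.

```python
def release_master(nodes, master):
    """Return the node that takes over NAS management services on Release master.

    nodes: cluster nodes in order, as (name, state) pairs, e.g. ("node2", "Joined").
    Assumption: the next joined node after the current master, wrapping around.
    """
    names = [name for name, _ in nodes]
    start = names.index(master)
    for offset in range(1, len(nodes)):
        name, state = nodes[(start + offset) % len(nodes)]
        if state == "Joined":
            return name
    # No other node is in a joined state, so the master node does not change.
    return master


nodes = [("node1", "Joined"), ("node2", "Disabled"), ("node3", "Joined")]
print(release_master(nodes, "node1"))  # node3
print(release_master([("node1", "Joined"), ("node2", "Enabled")], "node1"))  # node1
```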

To transfer NAS management services to another node:

- Click the NAS cluster name hyperlink on the main page of the app.

- Click the Cluster tab.

- In the Change to state column of the master node, select Release master.

- Click Apply.

- The NAS app communicates with the Appliance Controller, showing the steps of the configuration as it completes. Click OK when the configuration is complete to return to the main page of the app.

The next node in the NAS cluster becomes the master.

If the configuration fails, you can review and copy the configuration details for troubleshooting.

Deleting a NAS cluster removes the NAS cluster configuration and returns the nodes to a non-clustered state. The configuration information about how SMB or NFS clients can access share data is removed. However, share data remains intact, and clients can access the shares from the node that was master.

To delete a NAS cluster:

- Click the NAS cluster name hyperlink on the main page of the app.

- Click the Cluster tab.

- Click Delete This NAS cluster.

- Click OK to confirm you want to delete the NAS cluster.

- The NAS app communicates with the Appliance Controller, showing the steps of the configuration as it completes. Click OK when the configuration is complete to return to the main page of the app.

The NAS cluster configuration is deleted, and the nodes are returned to a non-clustered state.

If the configuration fails, you can review and copy the configuration details for troubleshooting.

By configuring the Load Balancing tab, you set up the NAS cluster for NAS failover and DNS load distribution.

In the event that the active master node becomes unavailable, NAS failover automatically transfers NAS management services from the active master node to another available node in the NAS cluster. Through this feature, clients have continuous access to NAS shares because NAS management services can be run on any node within the NAS cluster.

DNS load distribution distributes client connections to nodes within a NAS cluster, facilitating greater network bandwidth and better redistribution results after a NAS failover occurs. This feature accomplishes distribution of client connections to NAS cluster nodes by leveraging the DNS forward resolution process, zone delegation, and the NAS VIP pool.

Caution: The NAS app does not support configuration of the NFSv4 protocol. NFSv3 is the default, and NFSv4 is not supported with load distribution. If your environment uses NFSv4, and you had enabled NFSv4 for NFS HA using the NAS app or the Appliance Controller Console, you can use the NAS app for management of your NAS environment. However, you will not be able to use DNS load distribution for NFS shares. If NFSv4 is enabled, you can use DNS load distribution for SMB shares only.

Important

If you use DNS load distribution with NFSv3 clients, lock recovery during failover may not be honored.

Instead, all NFSv3 clients must mount shares through the same predesignated VIP from the VIP pool to ensure safe lock recovery during failover. Do not use the Master VIP as the predesignated VIP.

To configure the Load Balancing tab in the NAS app, you:

- Assign the NAS cluster a hostname.

- Add a NAS cluster VIP pool.

- Enable DNS VIP referral, allowing the NAS cluster to operate an authoritative DNS server for load distribution.

After creating your NAS cluster, you need to assign it a hostname, which is required to enable both scale-out NAS failover and DNS load distribution. NAS clients use the cluster hostname to connect to the NAS cluster, and with DNS load distribution enabled, clients access a share by querying the NAS cluster hostname.

Enter a cluster hostname that is viable within the DNS environment of the NAS cluster and its clients. The NAS cluster's clients must be able to make DNS queries that resolve the cluster hostname, and the DNS environment must be managed such that the cluster hostname can be delegated as a zone by its parent domain. Use the following formats to configure a hostname depending on your environment's OS:

- macOS SMB:

smb://<cluster_hostname>/share

- NFS:

<cluster_hostname>:/<export_path_to_share>

Enter the directory path to the share for the <export_path_to_share> parameter.

- Windows:

\\<cluster_hostname>\share
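As a quick illustration of the formats above, this Python sketch builds the address a client would use for a share. The function name, the client-type labels, and the example hostname and paths are hypothetical.

```python
def share_address(client: str, cluster_hostname: str, share: str) -> str:
    """Return the share address for a client type, using the cluster hostname."""
    if client == "macos-smb":
        return f"smb://{cluster_hostname}/{share}"
    if client == "nfs":
        # For NFS, `share` is the export path; exactly one slash follows the colon.
        return f"{cluster_hostname}:/{share.lstrip('/')}"
    if client == "windows":
        # Produces \\<cluster_hostname>\<share>
        return f"\\\\{cluster_hostname}\\{share}"
    raise ValueError(f"unknown client type: {client!r}")


print(share_address("windows", "nas.example.com", "projects"))  # \\nas.example.com\projects
print(share_address("nfs", "nas.example.com", "/stornext/snfs1/projects"))
# nas.example.com:/stornext/snfs1/projects
```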

A VIP pool is a collection of virtual IP addresses that enables NAS failover and DNS load distribution for scale-out clusters. The VIP pool must contain at least one VIP for each node in your cluster. Note that the master VIP assigned to the master node is not part of the VIP pool. Instead, it is used to access the NAS cluster for maintenance and upgrades and to support the DNS name server.

Before adding a NAS VIP pool, review the following information.

- For NAS clusters using Xcellis Workflow Director servers, you need to configure the nodes and node VIPs on the LAN client network. If you use another network port, the NAS cluster will fail to configure correctly.

- The NAS VIP pool must be set within the same network and subnet used by the NAS cluster and NAS clients—this network is typically the LAN client network.

- Enable global file locking within the StorNext file system before setting the NAS VIP pool for the NAS cluster. See Enable File Locking in the StorNext GUI.

- If you have configured AD or OpenLDAP to authenticate users accessing your NAS cluster, you must add your NAS VIP to the same DNS as your AD or OpenLDAP server. Otherwise, users authenticated through AD or OpenLDAP are unable to access the NAS shares through the NAS cluster.
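The pool rules in this section (at least one VIP per node, master VIP excluded, same network and subnet as the cluster and its clients) lend themselves to a pre-flight check. This Python sketch is hypothetical: `check_vip_pool` and its arguments are not part of the NAS app, and the addresses are examples.

```python
import ipaddress


def check_vip_pool(pool, node_count, master_vip, client_network):
    """Return problems with a proposed NAS VIP pool; an empty list means none found."""
    problems = []
    net = ipaddress.ip_network(client_network)
    vips = {ipaddress.ip_address(v) for v in pool}

    # At least one VIP for each node in the cluster.
    if len(vips) < node_count:
        problems.append(f"need at least {node_count} VIPs (one per node), got {len(vips)}")
    # The master VIP is not part of the VIP pool.
    if ipaddress.ip_address(master_vip) in vips:
        problems.append(f"master VIP {master_vip} must not be in the VIP pool")
    # Same network and subnet as the NAS cluster and NAS clients.
    for vip in sorted(vips):
        if vip not in net:
            problems.append(f"{vip} is outside {net}")
    return problems


print(check_vip_pool(["10.0.0.11", "10.0.0.12", "10.0.0.13"], 3, "10.0.0.10", "10.0.0.0/24"))  # []
```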

By leveraging the DNS forward resolution process, zone delegation, and the NAS VIP pool, the StorNext NAS DNS server software can distribute client connections to each node within the NAS cluster. Following is the process:

- Clients query the NAS cluster hostname to access a share.


- The NAS cluster operates an authoritative DNS server. Through the DNS system, zone delegation is performed to enable the NAS cluster's DNS server to return address records for the VIP pool to the clients.

The NAS cluster's DNS server returns all available VIPs for the cluster, rotating the order of the list with each client query. Other DNS servers and DNS resolvers in your network may also reorder the returned addresses. The result is a round-robin process that spreads client connections roughly evenly across the NAS cluster nodes.

- The client selects the VIP to which to connect, and mounts the share from the node to which the VIP is currently assigned.

Additional Information

Keep in mind that DNS zone transfer protocols are not used.
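To see why rotating the answer spreads connections, consider this Python sketch of a DNS server that returns every VIP but rotates the list once per query. It is not the StorNext NAS DNS server: the class name is hypothetical, and it assumes each client connects to the first address it receives, which real resolvers and clients do not always do.

```python
from collections import Counter, deque


class RotatingVipAnswer:
    """Return all VIPs on each query, starting one position later every time."""

    def __init__(self, vips):
        self._vips = deque(vips)

    def answer(self):
        result = list(self._vips)
        self._vips.rotate(-1)  # the next query starts with the following VIP
        return result


server = RotatingVipAnswer(["10.0.0.11", "10.0.0.12", "10.0.0.13"])
# Assume each of six clients connects to the first VIP in its answer.
first_choices = Counter(server.answer()[0] for _ in range(6))
print(first_choices)  # each of the three VIPs is chosen twice
```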

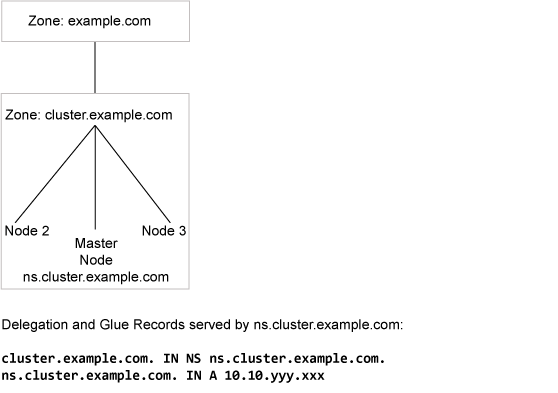

DNS turns memorable hostnames or website names into their numeric IP address counterparts. So when a client requests to communicate with a hostname, such as cluster.example.com, an authoritative DNS server knows the cluster address in the example.com domain and can return the correct numerical IP address to the client.

The domain name system is a hierarchical distributed database system. The organization of this system requires the publishing of resource records, which specify the relationships and operational characteristics of the various zones and servers.

Each individual domain, referred to as a zone, has an authoritative DNS server that serves resource records for the zone. To convey authority over a zone, the parent zone must delegate authority to the zone server by publishing the following zone delegation records:

- The nameserver (NS) record, which consists of the zone name and the nameserver for the zone, gives authority to a nameserver over a specific zone.

- The address (A) record for the nameserver, which resolves the name of the server in the NS record to its IP address.

In a zone delegation, the A record is also referred to as the glue record because it glues the nameserver's IP address to the NS record.

Example

cluster.example.com. IN NS ns.cluster.example.com.;

ns.cluster.example.com. IN A 10.10.xxx.yyy;

Zone Delegation in Action

For DNS load distribution, the NAS cluster acts as a DNS zone, and operates an authoritative DNS server serving its own resource records. So the domain where the NAS cluster's hostname resides must convey authority to the NAS cluster's DNS server for the DNS zone. Using the above example, this delegation of authority happens as follows:

- A NAS cluster with the hostname cluster.example.com acts as a cluster DNS zone within the example.com zone.

- The example.com DNS server must give the cluster DNS zone authority over cluster.example.com.

- The example.com DNS server must publish an NS record indicating the name of the DNS server that will serve records for cluster.example.com:

cluster.example.com. IN NS ns.cluster.example.com.;

- The NS record advertises that the ns.cluster.example.com. server is an authoritative DNS server for the delegated cluster.example.com. zone.

- In addition to the NS record, the address record resolves the name of the ns.cluster.example.com. server to its IP address, 10.10.xxx.yyy:

ns.cluster.example.com. IN A 10.10.xxx.yyy;

- Together, the NS record and the address (glue) record comprise a zone delegation from the example.com DNS server to the ns.cluster.example.com. server at 10.10.xxx.yyy.
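The two delegation records in this example follow a fixed pattern, so they can be generated from the zone name, the nameserver name, and the nameserver address. This Python sketch is hypothetical and emits zone-file style lines without the trailing `;` shown above; your IT department's DNS server may expect a different format, and the address in the usage line is an example.

```python
def fqdn(name: str) -> str:
    """Return the name with a trailing dot, as zone files expect for absolute names."""
    return name if name.endswith(".") else name + "."


def delegation_records(zone: str, ns_host: str, ns_ip: str) -> list[str]:
    """Records the parent zone (e.g. example.com) publishes to delegate `zone`."""
    return [
        # NS record: ns_host is authoritative for the delegated zone.
        f"{fqdn(zone)} IN NS {fqdn(ns_host)}",
        # A (glue) record: resolves ns_host to the NAS cluster's DNS server address.
        f"{fqdn(ns_host)} IN A {ns_ip}",
    ]


for record in delegation_records("cluster.example.com", "ns.cluster.example.com", "10.10.1.2"):
    print(record)
# cluster.example.com. IN NS ns.cluster.example.com.
# ns.cluster.example.com. IN A 10.10.1.2
```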


- To take advantage of DNS load distribution, you must be running StorNext 6 or later.

- The NAS cluster's DNS server offers distribution of VIP addresses after delegation occurs, without needing outside DNS administration. However, you cannot use the NAS cluster's DNS server as a replacement for your environment's overall DNS system.

- If you want to serve A records independently for the NAS cluster, make sure that each VIP assigned to the VIP pool has its own A record. However, DO NOT include an A record for the NAS cluster VIP. In addition, ensure that the A records change as the VIP pool changes.

- Because all records in DNS are cached, we recommend holding all queries until zone delegation occurs. Otherwise, the DNS server may not be able to locate the address record.

If you choose not to set up the StorNext NAS DNS server (that is, you do not select the DNS VIP referral by NAS cluster check box when configuring the Load Balancing tab), you must determine another way for clients to connect to the nodes. Following are a few potential approaches:

- Modify your existing DNS infrastructure with a hostname returning A records for each VIP pool address. Keep in mind that you must coordinate changes to the VIP pool with DNS changes.

- Direct users to a specific NAS cluster node, such as by assigning each client a node based on the first letter of the user's last name, the user's workgroup, or the user's building floor number.

- Modify other host resolution methods to point at specific nodes according to the desired distribution.
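If you serve the cluster hostname from your existing DNS instead, the points above call for one A record per VIP pool address and none for the master VIP, regenerated whenever the pool changes. A hypothetical Python sketch of generating those lines follows; the function name, hostname, and addresses are examples only.

```python
def pool_a_records(hostname: str, vip_pool: list[str], master_vip: str) -> list[str]:
    """One A record per VIP pool address for the cluster hostname; never the master VIP."""
    name = hostname if hostname.endswith(".") else hostname + "."
    return [f"{name} IN A {vip}" for vip in vip_pool if vip != master_vip]


# Regenerate these records whenever the VIP pool changes.
for record in pool_a_records("cluster.example.com", ["10.10.1.11", "10.10.1.12"], "10.10.1.10"):
    print(record)
# cluster.example.com. IN A 10.10.1.11
# cluster.example.com. IN A 10.10.1.12
```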

To ensure continuous access to shares, clients should always connect to the NAS cluster's master node through the NAS cluster VIP or hostname.

NAS clients access the NAS cluster to mount shares, as follows:

- NAS clients query the NAS cluster's DNS name, which is also the cluster's hostname.

- The NAS cluster's DNS server returns available VIPs from the VIP pool to the client.

- The client selects the VIP to which to connect.

- If the selected node fails, NAS failover services transfer the client connection and associated VIP to a different node.

- When the node comes back online, NAS failover services transfer one or more available VIPs to that node.
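The VIP movement described above can be sketched in Python. This is an illustration only, not how NAS failover services choose targets: `fail_node` and `restore_node` are hypothetical, and the policies (move VIPs to the least-loaded survivor; hand one VIP back from the busiest node on recovery) are assumptions.

```python
def fail_node(assignment: dict[str, list[str]], failed: str) -> dict[str, list[str]]:
    """Move every VIP held by `failed` to surviving nodes, least-loaded first."""
    new = {node: list(vips) for node, vips in assignment.items()}
    survivors = [node for node in new if node != failed]
    if not survivors:
        raise RuntimeError("no surviving node can take over the VIPs")
    moved, new[failed] = new[failed], []
    for vip in moved:
        target = min(survivors, key=lambda node: len(new[node]))
        new[target].append(vip)
    return new


def restore_node(assignment: dict[str, list[str]], node: str) -> dict[str, list[str]]:
    """Give a returning node one VIP from the node holding the most VIPs."""
    new = {n: list(vips) for n, vips in assignment.items()}
    new.setdefault(node, [])
    others = [n for n in new if n != node]
    if others:
        donor = max(others, key=lambda n: len(new[n]))
        if len(new[donor]) > 1:  # leave the donor with at least one VIP
            new[node].append(new[donor].pop())
    return new


before = {"node1": ["vip1"], "node2": ["vip2"], "node3": ["vip3"]}
after_failure = fail_node(before, "node2")
print(after_failure)  # {'node1': ['vip1', 'vip2'], 'node2': [], 'node3': ['vip3']}
print(restore_node(after_failure, "node2"))  # {'node1': ['vip1'], 'node2': ['vip2'], 'node3': ['vip3']}
```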

If you do not configure the scale-out cluster settings of the Load Balancing tab, the behavior is as follows:

- NFSv3 shares: NAS clients connect directly to the static NAS IP of one of the nodes in the NAS cluster. If the node the client is connected to fails, the client must connect to another node in the NAS cluster to mount the share.

- SMB shares: NAS clients connect to the master VIP. All data routes through the master node, which then distributes the connections across the NAS cluster nodes. If the master node fails, its services fail over to the next node in the cluster.

Failover Interruption to Services

Failover notification is OS or client dependent. If a node in a NAS cluster fails, users connected to the node might experience a momentary interruption to services. This interruption can range from a pause in communication with the remote share to a user needing to re-enter authentication credentials to access data residing on the NAS share.

To configure the Load Balancing tab:

- Click the NAS cluster name hyperlink on the main page of the app.

- Click the Load Balancing tab.

- Enter the DNS hostname for the NAS cluster. You can change, but not delete, the name later. See What Is the NAS Cluster Hostname? for more information.

- For each node in the NAS cluster, add at least one VIP and then click Add VIP.

See What Is the VIP Pool? for more information.

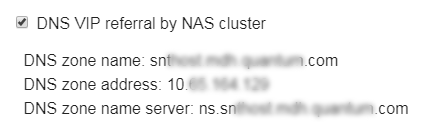

- Select the DNS VIP referral by NAS cluster check box to configure the NAS cluster to operate the StorNext DNS server and manage client distributions. See How Does DNS Load Distribution Work? for more information.

If you do not select the check box, see What Happens If I Don't Enable DNS VIP Referral (DNS Load Distribution)? for more information.

- Click Apply.

- The NAS app communicates with the Appliance Controller, showing the steps of the configuration as it completes. Click OK when the configuration is complete to return to the main page of the app.

If the configuration fails, you can review and copy the configuration details for troubleshooting.

- Work with your IT department to create a zone delegation for the NAS cluster.

Additional Information

Provide the DNS zone name, address, and name server to be added to the DNS configuration. You can obtain this information from the Load Balancing tab after you apply the configuration.

Depending on your environment, refer to one of the following procedures to create a zone delegation.

What's Next?

The next step is to Configure NAS Shares.

Before performing maintenance on a NAS cluster node, you need to disable it—both from the cluster and from SMB or NFS services. After you have completed maintenance, you can re-enable SMB or NFS services on the node and re-join it to the NAS cluster.

Before disabling a node, you must disable SMB or NFS services, as appropriate. Then, before rejoining the node, you need to re-enable its SMB or NFS services. You can perform these actions using the Appliance Controller Console. See Manage NAS Services in the Appliance Controller Documentation Center.

To disable a node for maintenance:

- Click the NAS cluster name hyperlink on the main page of the app.

- Click the Cluster tab.

- In the Change to state column of the node, select Disable node. The node will leave the cluster and then be disabled.

- Click Apply.

- The NAS app communicates with the Appliance Controller, showing the steps of the configuration as it completes. Click OK when the configuration is complete to return to the main page of the app.

If the configuration fails, you can review and copy the configuration details for troubleshooting.

To re-join a node to the NAS cluster after maintenance:

- Click the NAS cluster name hyperlink on the main page of the app.

- Click the Cluster tab.

- In the Change to state column of the node, select Join cluster. The node will be enabled and then join the cluster.

- Click Apply.

- The NAS app communicates with the Appliance Controller, showing the steps of the configuration as it completes. Click OK when the configuration is complete to return to the main page of the app.

If the configuration fails, you can review and copy the configuration details for troubleshooting.
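The required order of maintenance steps can be captured as a checklist. In this hypothetical Python sketch, the step labels are shorthand for the Appliance Controller Console and NAS app actions described above, and `first_problem` is not part of either tool.

```python
MAINTENANCE_ORDER = (
    "disable SMB/NFS services",    # Appliance Controller Console
    "disable node",                # NAS app: Change to state > Disable node
    "perform maintenance",
    "re-enable SMB/NFS services",  # Appliance Controller Console
    "join cluster",                # NAS app: Change to state > Join cluster
)


def first_problem(plan):
    """Describe the first deviation from the required order, or return None."""
    for i, expected in enumerate(MAINTENANCE_ORDER):
        if i >= len(plan):
            return f"missing step {i + 1}: {expected}"
        if plan[i] != expected:
            return f"step {i + 1}: expected {expected!r}, got {plan[i]!r}"
    if len(plan) > len(MAINTENANCE_ORDER):
        return f"unexpected extra step: {plan[len(MAINTENANCE_ORDER)]!r}"
    return None


print(first_problem(["disable node", "disable SMB/NFS services"]))
# step 1: expected 'disable SMB/NFS services', got 'disable node'
```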