With thin-provisioned storage, you may need to unmap space on the storage. For example, if the space is over-provisioned and shared by multiple devices, it can become “over-allocated”, and writes to the storage can fail even though the storage and the file system report available space. This usually occurs when the actual storage space is completely mapped: the storage maps space when it is written and never unmaps it without intervention by the file system or the administrator. StorNext versions prior to StorNext 6 do not unmap space when files are removed.

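StorNext 6 provides two file-system-level mechanisms that unmap free space in the file system: cvmkfs, which unmaps free space when the file system is created, and cvfsck -U, which unmaps free space in an existing file system.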

Note: This functionality is available on Linux MDCs and with the QXS series storage. Quantum recommends you not over-provision your StorNext volumes.

Having the array manage the allocation and mapping of blocks onto physical storage is commonly known as thin provisioning. On a new thin-provisioned volume, all blocks (LBAs, Logical Block Addresses) are unmapped and unallocated. An LBA or LBA range is allocated and mapped only when it is first written. Allocation and mapping are done in 4 MiB pages.
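As a rough illustration of the 4 MiB mapping granularity, the sketch below computes which pages a write touches and how much array capacity the write consumes. It assumes pages are aligned at multiples of 4 MiB from LBA 0 and a 512-byte logical block size; both are illustrative assumptions, not QXS specifications.

```python
PAGE_SIZE = 4 * 1024 * 1024  # 4 MiB allocation/mapping page
BLOCK_SIZE = 512             # assumed logical block size in bytes


def pages_touched(start_lba: int, num_blocks: int) -> range:
    """Return the indices of the 4 MiB pages mapped by a write of
    num_blocks logical blocks starting at start_lba (assumes aligned pages)."""
    if num_blocks <= 0:
        return range(0)
    first_byte = start_lba * BLOCK_SIZE
    last_byte = (start_lba + num_blocks) * BLOCK_SIZE - 1
    return range(first_byte // PAGE_SIZE, last_byte // PAGE_SIZE + 1)


def bytes_mapped(start_lba: int, num_blocks: int) -> int:
    """Array capacity consumed by the write: whole pages, not just the bytes written."""
    return len(pages_touched(start_lba, num_blocks)) * PAGE_SIZE


# A 4 KiB write that straddles a page boundary maps two full pages (8 MiB).
boundary_lba = PAGE_SIZE // BLOCK_SIZE  # first LBA of page 1
print(list(pages_touched(boundary_lba - 4, 8)))
print(bytes_mapped(boundary_lba - 4, 8) // (1024 * 1024))
```

The point of the example is that mapping happens per page, so even small writes can map far more capacity than they actually store.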

Thin-provisioning can offer some advantages:

- If a drive fails and is replaced, the array need only reconstruct mapped LBAs.

- Volumes do not have to be statically carved from underlying RAID groups and can be dynamically expanded.

- Volumes can be over-provisioned.

- Array utilities like volume copy and snapshots can be more efficient.

Thin-provisioning also has some disadvantages:

- The array mapping mechanism does not know when the file system frees blocks, so an unmap (trim) operation is required to release mapped/allocated blocks.

- Because the array handles the allocation, it essentially nullifies any optimizations that the StorNext allocator has made.

- All volumes in a storage pool are allocated on a first-write basis, so concurrent streams are guaranteed to end up with a checkerboard (interleaved) allocation.

- Performance is inconsistent.
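To see why first-write allocation interleaves streams, the toy model below lets two streams write alternately to a thin-provisioned volume. The array hands out physical pages in the order logical pages are first written, so extents that the file system laid out contiguously end up interleaved on the physical storage. The model (one page per write, strict round-robin between streams, a single free-page pointer) is a simplifying assumption, not a description of the QXS allocator.

```python
def first_write_layout(streams: dict[str, list[int]]) -> list[tuple[str, int]]:
    """Toy model of a thin-provisioned array that maps each logical page to
    the next free physical page the first time it is written.

    streams maps a stream name to the logical pages it writes, in order.
    Streams take turns writing one page each (round-robin).
    Returns the physical layout: (stream, logical_page) for each physical page.
    """
    mapping: dict[int, int] = {}  # logical page -> physical page
    layout: list[tuple[str, int]] = []
    queues = {name: list(pages) for name, pages in streams.items()}
    while any(queues.values()):
        for name, pending in queues.items():
            if not pending:
                continue
            logical = pending.pop(0)
            if logical not in mapping:  # first write: allocate next physical page
                mapping[logical] = len(layout)
                layout.append((name, logical))
    return layout


# The file system gave stream A logical pages 0-3 and stream B pages 100-103,
# but on the array their pages alternate.
layout = first_write_layout({"A": [0, 1, 2, 3], "B": [100, 101, 102, 103]})
print([name for name, _ in layout])
```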

Beginning with StorNext 6, the cvmkfs command automatically detects whether the LUNs in each Stripe Group (SG) are thin-provisioned QXS series devices. Because this is done for every SG, the MDC needs access to all of the storage configured in the file system to perform the thin-provisioning work.

The storage is notified that all of the file system's free space on each LUN is now available. If a LUN was previously written to and therefore contains mappings, those mappings are all unmapped, making the consumed storage available to other devices. Because cvmkfs writes the metadata and journal, those regions are either re-mapped or left mapped during the run of the command.

If cvmkfs is invoked with the -e or -r option and the file system is not managed, the unmap work is skipped for all stripe groups that can hold user data. The thin-provisioning work is still done for all other stripe groups, for example, metadata-only SGs.

The -T option causes the cvmkfs command to skip all thin-provisioning work on all stripe groups. This is useful when the administrator knows all the space is already unmapped and the command is failing because some LUNs are not available.
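The option rules above can be summarized as a small decision function. This is a sketch of the documented behavior only: the function and its parameter names are hypothetical, it treats "thin-provisioned QXS" as a per-SG flag for simplicity, and it does not call or reproduce cvmkfs.

```python
def thin_work_at_mkfs(sg_is_thin_qxs: bool, holds_user_data: bool,
                      opt_e: bool = False, opt_r: bool = False,
                      opt_T: bool = False, managed: bool = False) -> bool:
    """Hypothetical helper: return True if, per the rules in this section,
    cvmkfs would do thin-provisioning unmap work on one stripe group."""
    if not sg_is_thin_qxs:
        # Only LUNs detected as thin-provisioned QXS devices are unmapped.
        return False
    if opt_T:
        # -T skips thin-provisioning work on all stripe groups.
        return False
    if (opt_e or opt_r) and not managed and holds_user_data:
        # -e or -r on an unmanaged file system skips SGs that can hold user data.
        return False
    # Everything else, e.g. metadata-only SGs, still gets the unmap work.
    return True


# Example: cvmkfs -e on an unmanaged file system with a metadata-only SG
# and a data SG.
for name, holds_data in [("metadata SG", False), ("data SG", True)]:
    print(name, thin_work_at_mkfs(True, holds_data, opt_e=True))
```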

Each thin-provisioned LUN in an SG has a page size and a maximum unmap size. All LUNs in an SG must have the same page size and the same maximum unmap size; otherwise, the cvmkfs command fails. This failure can be bypassed with the -T option, but then all thin-provisioning unmap work is skipped on all SGs.

Note: Do not configure an SG with LUNs that have different page sizes or different maximum unmap sizes.
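The same-sizes rule can be checked ahead of time against an inventory of LUN properties. In the sketch below, the LunInfo record, the SG and LUN names, and the size values are all hypothetical; how you obtain each LUN's page size and maximum unmap size from the storage is outside its scope.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LunInfo:
    """Hypothetical record of one LUN's thin-provisioning properties."""
    name: str
    page_size: int       # bytes
    max_unmap_size: int  # bytes


def mismatched_sgs(sgs: dict[str, list[LunInfo]]) -> dict[str, set[tuple[int, int]]]:
    """Return the SGs whose LUNs do not all share one (page_size,
    max_unmap_size) pair, mapped to the distinct pairs found.
    Per this section, such SGs would make cvmkfs fail."""
    bad = {}
    for sg, luns in sgs.items():
        pairs = {(lun.page_size, lun.max_unmap_size) for lun in luns}
        if len(pairs) > 1:
            bad[sg] = pairs
    return bad


MiB = 1024 * 1024
# Hypothetical inventory: lun3 reports a different maximum unmap size.
inventory = {
    "sg0": [LunInfo("lun0", 4 * MiB, 64 * MiB), LunInfo("lun1", 4 * MiB, 64 * MiB)],
    "sg1": [LunInfo("lun2", 4 * MiB, 64 * MiB), LunInfo("lun3", 4 * MiB, 32 * MiB)],
}
print(sorted(mismatched_sgs(inventory)))
```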

Beginning with StorNext 6, the cvfsck command can also perform thin-provisioning unmap operations on the free space of a given file system. The machine running the command must have access to all of the LUNs in the file system in order to unmap space on them.

This is done by executing the following commands:

cvadmin -e 'stop <fsname>'

cvfsck -U <fsname>

cvadmin -e 'start <fsname>'

This unmaps all free space in the file system.

If the file system has the AllocSessionReservationSize parameter set to a non-zero value and there are active sessions, any chunks reserved for allocation sessions are not unmapped.

To unmap all free space, including the session chunks, run the following commands, which stop all writes and make sure all data is flushed before the unmap:

cvadmin -e 'stop <fsname>'

cvadmin -e 'start <fsname>'

/bin/ls <mount point>

sleep 2

cvadmin -e 'stop <fsname>'

cvfsck -U <fsname>

cvadmin -e 'start <fsname>'
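For scripting, the two command sequences above can be wrapped in a small helper. This is a sketch, not a Quantum-supplied tool: it only issues the cvadmin, ls, and cvfsck invocations shown in this section, defaults to a hypothetical dry_run mode that prints the plan instead of running it, and assumes the commands are on the PATH of a machine with access to all LUNs. The file system name and mount point in the usage line are hypothetical.

```python
import shlex
import subprocess
import time
from typing import List, Optional, Union


def unmap_free_space(fsname: str, mount_point: Optional[str] = None,
                     dry_run: bool = True) -> List[List[str]]:
    """Build, and unless dry_run is set, run the cvfsck -U sequence for fsname.

    If mount_point is given, the file system is first stopped and restarted,
    the mount point is listed, and the helper waits 2 seconds, as in the
    sequence that also unmaps session-reserved chunks.
    Returns the commands in the order they are (or would be) executed.
    """
    stop = ["cvadmin", "-e", f"stop {fsname}"]
    start = ["cvadmin", "-e", f"start {fsname}"]
    steps: List[Union[List[str], float]] = []
    if mount_point is not None:
        steps += [stop, start, ["/bin/ls", mount_point], 2.0]  # 2.0 = sleep 2
    steps += [stop, ["cvfsck", "-U", fsname], start]

    commands: List[List[str]] = []
    for step in steps:
        if isinstance(step, float):
            if dry_run:
                print(f"sleep {step:g}")
            else:
                time.sleep(step)
            continue
        commands.append(step)
        if dry_run:
            print(shlex.join(step))
        else:
            # check=True raises CalledProcessError and stops at the first failure.
            subprocess.run(step, check=True)
    return commands


# Hypothetical file system name and mount point; prints the plan only.
unmap_free_space("snfs1", mount_point="/stornext/snfs1")
```

Because the helper stops at the first failing command, the file system may be left stopped; check its state with cvadmin before retrying.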

Beginning with StorNext 6, after adding a Stripe Group with cvupdatefs or sgadd, run the cvfsck -U <fsname> command as described in the Unmapping Free Space section to unmap any existing mappings for that SG as well as all the others.

Administrators can compare the free/allocated space on a given Stripe Group with the amount of unmapped/mapped space on that Stripe Group. To do so, execute the following command:

Note the amount of free/allocated space on a given Stripe Group.

Then, execute the following command on each LUN in that SG and add up all of the unmapped/mapped space for each LUN:

Some space on the LUNs is not available to the file system, so do not expect the totals to match exactly.

Note: Space becomes mapped only when unmapped blocks are written, and not all space allocated in the file system is necessarily written. This happens with optimistic allocation and with pre-allocation that is not entirely written. As a result, unmapped space is typically higher than free space when a new file system is written to. As files are removed, unmapped space does not increase as free space increases. If free space is significantly larger than unmapped space, run the cvfsck -U command to increase the unmapped space.
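Once the per-SG free space and per-LUN unmapped figures have been collected, the comparison in the note above is simple arithmetic. The sketch below sums unmapped space across an SG's LUNs and flags the SG when free space exceeds that sum by more than a threshold. The 10% threshold, the helper name, and the sample figures are assumptions for illustration; this section only says "significantly larger".

```python
def needs_unmap(sg_free_bytes: int, lun_unmapped_bytes: list[int],
                threshold: float = 0.10) -> bool:
    """Hypothetical check: True if SG free space exceeds the summed unmapped
    space of its LUNs by more than `threshold` (a fraction of free space),
    suggesting that cvfsck -U would reclaim a meaningful amount of space.

    Unmapped space larger than free space (typical of a newly written file
    system) never triggers the check.
    """
    total_unmapped = sum(lun_unmapped_bytes)
    return sg_free_bytes - total_unmapped > threshold * sg_free_bytes


TB = 10**12
# Hypothetical SG: 7 TB free, but only 2.5 TB unmapped across its three LUNs.
print(needs_unmap(7 * TB, [1 * TB, 1 * TB, TB // 2]))
```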

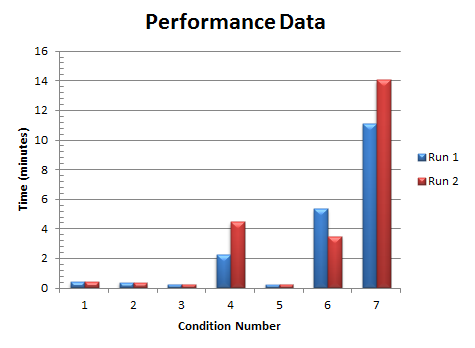

The time cvfsck -U <fsname> takes to unmap thin-provisioned LUNs varies significantly. Performance was measured with cvfsck -U <fsname> under the following seven conditions:

Note: The test system consisted of a 14 TB file system containing 3 LUNs (each could consume up to 4.7 TB).

1. After the initial cvmkfs.

2. After writing many files and mapping about 8.1 TB.

3. After filling the file system.

4. After removing half of the files, leaving 7 TB used.

5. After re-filling the file system.

6. After removing a different set of files (about half of the files), leaving 7.2 TB used.

7. After removing all the files.

| Condition when cvfsck -U ran | Run 1 | Run 2 |
|---|---|---|
| 1 | 0 min 24.8 secs | 0 min 24.7 secs |
| 2 | 0 min 22.4 secs | 0 min 21.8 secs |
| 3 | 0 min 15.3 secs | 0 min 15.5 secs |
| 4 | 2 min 15 secs | 4 min 28 secs |
| 5 | 0 min 15 secs | 0 min 15.5 secs |
| 6 | 5 min 20 secs | 3 min 29 secs |
| 7 | 11 min 7 secs | 14 min 2 secs |

The results show that the time cvfsck -U <fsname> takes to unmap thin-provisioned LUNs varies significantly, both between conditions and between runs under the same condition. In addition, unmap operations continue to run in the background for several seconds after the command completes.
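Converting the table's timings to seconds makes the variation concrete: the slowest run (condition 7, run 2, 842 s) takes roughly 56 times as long as the fastest (condition 5, run 1, 15 s), and the two runs under condition 4 differ by almost a factor of two. The sketch below performs that conversion; the values are copied from the table and nothing beyond them is assumed.

```python
# Timings from the table above, in seconds: condition -> (run 1, run 2).
TIMINGS = {
    1: (24.8, 24.7),
    2: (22.4, 21.8),
    3: (15.3, 15.5),
    4: (2 * 60 + 15, 4 * 60 + 28),
    5: (15.0, 15.5),
    6: (5 * 60 + 20, 3 * 60 + 29),
    7: (11 * 60 + 7, 14 * 60 + 2),
}


def run_spread(r1: float, r2: float) -> float:
    """Difference between the two runs as a fraction of the faster run."""
    return abs(r1 - r2) / min(r1, r2)


for cond, (r1, r2) in TIMINGS.items():
    print(f"condition {cond}: {min(r1, r2):.1f} to {max(r1, r2):.1f} s, "
          f"run-to-run spread {run_spread(r1, r2):.0%}")
```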