Tools > Storage Manager > Distributed Data Mover

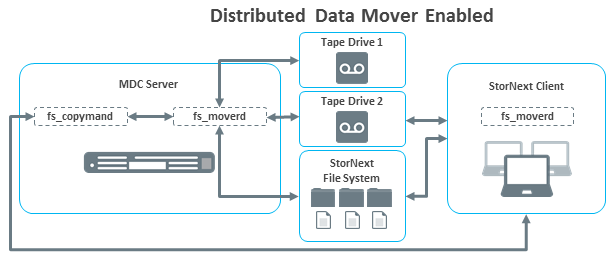

When the Distributed Data Mover (DDM) feature is enabled, data movement operations are distributed to client machines from the metadata controller, which can improve the overall throughput of data movement to archive tiers of storage.

Quantum developed the Distributed Data Mover feature to enhance the data movement scalability of its StorNext Storage Manager software.

The data mover process, fs_moverd, runs on the metadata controller (MDC) and clients, allowing up to one group of threads per tape drive or storage disk (SDisk) stream to run concurrently on the MDC and each client.

Note: The DDM feature supports only storage disks on StorNext file systems, not on NFS.

The DDM feature expands data moving flexibility by transferring the mover process to clients that have access to the drives and managed file systems. The actual data moving process remains the same, with the added benefit that the load on the metadata controller is alleviated by moving those processes to clients. The following diagram illustrates the data moving process when the Distributed Data Mover feature is enabled:

| Process | Description |
|---|---|
| fs_fcopymand | Manages copy requests and routes them to the fs_moverd process when copy resources become available. |
| fs_fmoverd | The process that performs multiple copy operations and database updates, either on the MDC or a client. |

Caution: Using the StorNext Distributed Data Mover (DDM) feature can boost overall data movement performance to Storage Manager managed tiers by distributing data movement across multiple systems. By default, StorNext software requires the use of SCSI Persistent Reservations on StorNext metadata controllers and DDM clients. SCSI persistent reservations control access to shared devices, such as tape, and ensure that Storage Manager retains control of the tape device paths even if a failover occurs. For additional information, see Tape Devices and Persistent SCSI Reserve.

The Distributed Data Mover feature provides the following benefits:

- Concurrent utilization of shared StorNext tape and disk tier resources by multiple Distributed Data Mover hosts
- Scalable data streaming
- Flexible, centralized configuration of data movement
- Dynamic Distributed Data Mover host reconfiguration
- Support for StorNext File System storage disks (SDisks)
- Works on HA systems without additional configuration

Following are definitions for some terms as they pertain to the Distributed Data Mover feature:

| Terminology | Description |
|---|---|
| Mover | A process that copies data from one device/file to another. The process can run locally on the metadata controller or on a remote client. See definitions for these terms below. |
| Host | Any server/client on the SAN. Any host can serve as a location for a mover to run as long as it meets the specifications listed in the Supported Operating Systems section below. |
| Metadata Controller (MDC) | The server on which the StorNext Storage Manager software is running. (The metadata controller host.) Also known as the local host, or the primary server on HA systems. |
| Remote Client | A host other than the MDC. |

The Distributed Data Mover feature uses persistent SCSI-3 reservations. All tape devices used with this feature must support the PERSISTENT RESERVE IN/OUT functionality as described in the SCSI Primary Commands-3 (SPC-3) standard. One implication is that LTO-1 drives cannot be used with the DDM feature.

SCSI-3 persistent reservation commands attempt to prevent unintended access to tape drives that are connected by using a shared-access technology such as Fibre Channel. Access to a tape drive is granted based on the host system that reserved the device. SCSI-3 persistent reservation enables access for multiple nodes to a tape device and simultaneously blocks access for other nodes.

The StorNext Distributed Data Mover feature requires that SCSI-3 persistent reservations are enabled. Refer to parameter FS_SCSI_RESERVE in /usr/adic/TSM/config/fs_sysparm.README to direct the StorNext Manager to use SCSI-3 persistent reservations.

Caution: Using IBM Advanced Path Failover (APFO) requires that SCSI Persistent Reservations be disabled, because device reservations are handled by IBM’s software, not StorNext. If you configure a DDM and use the IBM APFO driver, set the "FS_SCSI_RESERVE=multipath;" configuration parameter. To configure the parameter, see

A third-party utility is available to help you determine whether your tape devices are or are not compatible with SCSI-3 persistent reservations. This utility is called sg3_utils, and is available for download from many sites. This package contains low level utilities for devices that use a SCSI command set. The package targets the Linux SCSI subsystem.

You must download and install the sg3_utils package before running the following commands. In the following example, two SAN-attached Linux systems (sfx13 and sfx14) are zoned to see a tape drive.

- Register the reservation keys by running the commands:

  [root@sfx13]# sg_persist -n -d /dev/sg81 -o -I -S 0x123456

  [root@sfx14]# sg_persist -n -d /dev/sg78 -o -I -S 0xabcdef

- List the reservation key by running the command:

  [root@sfx13]# sg_persist -n -k /dev/sg81

- Create reservation by running the command:

  [root@sfx13]# sg_persist -n -d /dev/sg81 -o -R -T 3 -K 0x123456

- Read reservation by running the command:

  [root@sfx14]# sg_persist -n -d /dev/sg78 -r

- Preempt reservation by running the command:

  [root@sfx14]# sg_persist -n -d /dev/sg78 -o -P -T 3 -S 0x123456 -K 0xabcdef

- Release reservation by running the command:

  [root@sfx14]# sg_persist -n -d /dev/sg78 -o -L -T 3 -K 0xabcdef

- Delete key by running the commands:

  [root@sfx13]# sg_persist -n -d /dev/sg81 -o -C -K 0x123456

  [root@sfx14]# sg_persist -n -d /dev/sg78 -o -C -K 0xabcdef
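If you want to script the compatibility check, the commands above can be assembled programmatically. The following Python sketch is illustrative only and is not part of StorNext or sg3_utils: the function name sg_persist_argv and the action names are hypothetical, and the option letters are copied directly from the example commands. The sketch only builds each command line; it does not run anything.

```python
from typing import List, Optional

# Option letters copied from the example commands above. Nothing is executed.
_ACTION_FLAGS = {
    "register": ["-o", "-I"],
    "reserve": ["-o", "-R", "-T", "3"],
    "read_reservation": ["-r"],
    "preempt": ["-o", "-P", "-T", "3"],
    "release": ["-o", "-L", "-T", "3"],
    "clear": ["-o", "-C"],
}


def sg_persist_argv(action: str, device: str,
                    key: Optional[str] = None,
                    other_key: Optional[str] = None) -> List[str]:
    """Build (but do not run) an sg_persist command as an argv list."""
    if action not in _ACTION_FLAGS:
        raise ValueError(f"unknown action: {action}")
    if action != "read_reservation" and key is None:
        raise ValueError(f"{action} requires this host's key")
    if action == "preempt" and other_key is None:
        raise ValueError("preempt requires the key being preempted")

    argv = ["sg_persist", "-n", "-d", device] + _ACTION_FLAGS[action]
    if action == "register":
        argv += ["-S", key]                    # key to register
    elif action == "preempt":
        argv += ["-S", other_key, "-K", key]   # key to preempt, then own key
    elif action != "read_reservation":
        argv += ["-K", key]                    # reserve, release, clear
    return argv


print(" ".join(sg_persist_argv("register", "/dev/sg81", "0x123456")))
```

On a host with sg3_utils installed, the returned list could be passed to subprocess.run to execute the command as root.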

Quantum does not currently support using multiple paths to tape drives. Also, VTL does not support SCSI-3 persistent reservations.

You must install the snfs-mover rpm on each client you want to act as a distributed data mover. Red Hat and SUSE mover clients require installing the following Quantum-supplied DDM packages:

- quantum_curl
- quantum_openssl
- snfs-mover
- quantum_unixODBC
- snltfs

Note: The packages might require using the --force option.

Follow the installation steps below for each client:

- Log in as root.
- Download the client with DDM package from the MDC.
- Install the .rpm files in the DDM package .tar archive.

  For a new client installation, run either the command:

  rpm -ivh *.rpm

  or

  rpm -Uvh *.rpm

  For a client upgrade, execute the following:

  /etc/init.d/cvfs fullstop

  rpm -Uhv *.rpm

  rpm -Uhv snfs*

  service cvfs start

On the Tools menu, click Storage Manager, and then click Distributed Data Mover. The Tools > Storage Manager > Distributed Data Movers page appears.

The DDM page displays any previously configured DDM-enabled hosts, managed file systems, tape drives, storage disks, and Object Storage, as well as the current status:

- Enabled

- Not Configured

- Not Enabled

- Internally Disabled

| Terminology | Description |
|---|---|
| Configured | Indicates that a host or device has been added to the list of hosts and devices to be used for DDM operations. DDM does not recognize a host or device until it has been configured. |
| Enabled | Indicates that a host or device has been configured and is ready to be used for DDM operations. A host or device cannot be enabled until it is first configured, but a configured host or device may be either enabled or disabled. |

When DDM is enabled, data moving responsibilities are distributed among the hosts you specify as described in Manage DDM Hosts.

- Next to the Distributed Data Mover label, select Enable from the list.

- (Optional) Click Threshold. Use the Threshold option only if you want most data moving operations to run locally on the MDC. When you select the Threshold option, the local host (in other words, the metadata controller) is given a preference over the remote clients. The characteristics of this option are:

  - Mover processes will not be assigned to a remote client until a threshold of local movers are already running.
  - After reaching the threshold of local running movers, the “all” option is used for allocating new mover requests.
  - If not specified, the default value for the threshold is zero. This means if a value is not set for the threshold via fsddmconfig the system will effectively run in “all” mode.

- Click Apply.
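The Threshold behavior described above can be modeled in a few lines. The following Python sketch is illustrative only and is not StorNext code: the function names prefers_local and allocation_mode are hypothetical, and the sketch assumes that local preference applies only while the number of movers running on the MDC is below the threshold.

```python
def prefers_local(local_running: int, threshold: int = 0) -> bool:
    """Return True while new movers should still be placed on the MDC.

    Assumption: with the default threshold of zero, local preference never
    applies, so the system effectively runs in "all" mode.
    """
    if threshold < 0:
        raise ValueError("threshold must be non-negative")
    return local_running < threshold


def allocation_mode(local_running: int, threshold: int = 0) -> str:
    """Name the placement policy used for the next mover request."""
    return "local" if prefers_local(local_running, threshold) else "all"


# With a threshold of 4, the first four movers run on the MDC.
for running in range(6):
    print(running, allocation_mode(running, threshold=4))
```

With a threshold of 4, requests that arrive while fewer than four local movers are running stay on the MDC; later requests are allocated across all hosts.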

When DDM is disabled, data moving responsibilities rest solely on the primary server.

- Next to the Distributed Data Mover label, select Disable from the list.

- Click Apply.

The Distributed Data Mover page enables you to add and configure a new host for DDM, or change settings for a previously configured host. You can also delete a host from the list of DDM-enabled hosts.

Note: When configuring Distributed Data Movers (DDM), the mount path on each DDM client must exactly match the mount path on the MDCs, because the GUI assumes DDM clients have the same directory structure as the MDCs. DDM does not create this structure automatically on DDM client systems; it is a manual process. For example, if a drive is replaced on a DDM client, you must create the directory structure on the new drive on the DDM client to match the MDC mount paths.
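Because matching mount paths are created manually, it can help to compare the two sets of paths before configuring a host. The following Python sketch is illustrative only and is not part of StorNext: the function name missing_mount_paths and the example paths are hypothetical, and collecting the mount paths from each system is left to you. The sketch assumes that "exactly match" means an exact string match.

```python
from typing import Iterable, List


def missing_mount_paths(mdc_paths: Iterable[str],
                        client_paths: Iterable[str]) -> List[str]:
    """Return MDC mount paths that have no exact match on the DDM client."""
    # Exact string comparison on purpose (an assumption of this sketch):
    # "/stornext/snfs1" and "/stornext/snfs1/" are treated as different.
    return sorted(set(mdc_paths) - set(client_paths))


# Hypothetical mount paths, for illustration only.
mdc = ["/stornext/snfs1", "/stornext/snfs2"]
client = ["/stornext/snfs1"]
print(missing_mount_paths(mdc, client))
```

An empty result means every MDC mount path has an identical path on the client.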

To add a host:

- On the Distributed Data Mover page, click New. Fields appear where you can enter host information.

- At the Host field, enter the host name you are adding.

- Enter the remaining fields in the upper section of the page:

  | Parameter | Description |
  |---|---|
  | Enabled | This option determines whether DDM is currently enabled or disabled on the selected host. Check this box to enable DDM, or uncheck it to disable DDM. |
  | MDC Mover Threshold | This option sets the threshold used when this host is the MDC. It is valid only when this host is the MDC and the global setting is set to Threshold. It defines how many mover processes run on the MDC before remote movers are started. The default is zero. To change the default value, click the box and enter the desired number. |
  | Max Movers (active MDC) | This option defines the maximum number of copy requests that the fs_moverd process is allowed to perform concurrently when it is running as the MDC server. There are two settings for this option: Unlimited means there is no limit to the number of copy requests allowed on the host, and Limited sets a maximum number allowed at one time. The default value is Unlimited. To configure for unlimited requests, verify that this option is unchecked; the word Unlimited is displayed next to the check box. To set a limit, select the check box. A second box appears next to the check box. In this box, enter the number of copy requests that the fs_moverd process can run at the same time on the host. |
  | Max Movers (client or standby MDC) | This option defines the maximum number of copy requests that the fs_moverd process is allowed to perform concurrently when it is running as a client or standby MDC. There are two settings for this option: Unlimited means there is no limit to the number of copy requests allowed on the host, and Limited sets a maximum number allowed at one time. The default value is Unlimited. To configure for unlimited requests, verify that this option is unchecked; the word Unlimited is displayed next to the check box. To set a limit, select the check box. A second box appears next to the check box. In this box, enter the number of copy requests that the fs_moverd process can run at the same time on the host. |

- Under the corresponding headings, select the Managed File Systems, Tape Drives and Storage Disks you want to include in DDM processing.

- To add your selections to the new host, click Apply. To exit without saving, click Cancel. To remain on the page but clear your entries, click Reset.

- When the confirmation message appears, click Yes to proceed or No to abort.

- After a message informs you that your changes were successfully saved, click OK to continue.
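The two Max Movers settings in the procedure above amount to a limit that depends on the role the host is currently playing. The following Python sketch is illustrative only and is not StorNext code: the function names mover_limit and can_start_mover are hypothetical, and None is used here to represent the Unlimited setting.

```python
from typing import Optional


def mover_limit(is_active_mdc: bool,
                max_movers_active: Optional[int] = None,
                max_movers_client: Optional[int] = None) -> Optional[int]:
    """Pick the limit for the host's current role (None means Unlimited)."""
    return max_movers_active if is_active_mdc else max_movers_client


def can_start_mover(running: int, limit: Optional[int]) -> bool:
    """Return True if one more copy request fits under the limit."""
    return limit is None or running < limit


# Hypothetical host: limited to 8 movers as active MDC, 2 as client/standby.
limit = mover_limit(is_active_mdc=False, max_movers_active=8, max_movers_client=2)
print(can_start_mover(running=1, limit=limit))
print(can_start_mover(running=2, limit=limit))
```

Keeping the two limits separate lets the same host be configured once and still behave appropriately after a change of role, for example after an HA failover.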

To edit a host:

- On the Distributed Data Mover page, in the Hosts table, click the host you want to edit.

- Click Edit.

- Modify the host configuration as desired.

- If desired, select or remove managed file systems, tape drives and storage disks.

- Click Apply to save your changes.

- When the confirmation message appears, click Yes to proceed or No to abort.

- After a message informs you that your changes were successfully saved, click OK to continue.

To delete a host:

- On the Distributed Data Mover page, in the Hosts table, click the host you want to delete.
- Click Delete to exclude the host from DDM operation. Clicking Delete does not actually delete the host from your system, but rather excludes it from the list of DDM hosts.
- When the confirmation message appears, click Yes to proceed or No to abort.
- After a message informs you that the host was successfully deleted, click OK to continue.

When all hosts are chosen, no special preference is given to the local host. Following are the operating characteristics when all hosts are chosen:

- Internally within the StorNext Copy Manager (fs_fcopymand) there will be a list of hosts to use (that is, the local host and the remote clients). At this time there is no way to specify the order in which hosts appear in the list.
- For each host in the list, the following information is tracked:
  - The number of movers currently running on the host
  - The time of the last assignment
  - Whether the host is currently disabled

Note: If one host has fewer drives than all the other hosts (for example, two drives versus ten), the host with fewer drives will almost always be chosen for operations on those two drives because it is likely to have the fewest running movers.
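The per-host information listed above suggests a selection rule along the following lines. The following Python sketch is illustrative only and is not StorNext code: the names HostState and choose_host are hypothetical. Preferring the host with the fewest running movers follows from the note above; skipping disabled hosts and breaking ties by the oldest assignment time are assumptions of this sketch.

```python
from dataclasses import dataclass
from typing import Iterable, Optional


@dataclass
class HostState:
    name: str
    running_movers: int      # number of movers currently running on the host
    last_assigned: float     # time of the last assignment (epoch seconds)
    disabled: bool = False   # whether the host is currently disabled


def choose_host(hosts: Iterable[HostState]) -> Optional[HostState]:
    """Pick a host for the next mover, or None if every host is disabled.

    Assumed rule: fewest running movers first, then the host whose last
    assignment is oldest.
    """
    candidates = [h for h in hosts if not h.disabled]
    if not candidates:
        return None
    return min(candidates, key=lambda h: (h.running_movers, h.last_assigned))


# Hypothetical hosts, for illustration only.
hosts = [
    HostState("mdc", running_movers=3, last_assigned=100.0),
    HostState("client1", running_movers=1, last_assigned=200.0),
    HostState("client2", running_movers=1, last_assigned=150.0),
    HostState("client3", running_movers=0, last_assigned=50.0, disabled=True),
]
print(choose_host(hosts).name)
```

In this example client3 is skipped because it is disabled, and client2 is chosen over client1 because both have one running mover and client2 was assigned less recently.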

A Data Movement Report is available from the Reports menu, and displays current configuration information and activity. For more information about the DDM report, see