Configuration > Name Servers

The Name Servers page allows you to manage machines that act as File System name servers. You can specify either a hostname or an IP address for a machine, but an IP address is preferable because it avoids problems associated with the name lookup system, such as DNS or NIS.

Note: Name servers are also referred to as coordinators. The terms may be used interchangeably throughout this documentation.

Note: On Linux systems, you should turn off the NetworkManager service, because it can interfere with the StorNext name server and Linux network devices.

Note: If you are connecting Xsan Clients to StorNext MDCs, verify you are meeting the requirements listed in the Requirements section of the Connect an Xsan Client to a StorNext MDC topic.

Note: To configure a host to be a coordinator for a StorNext cluster, you must (at a minimum) install the client package.

Scenario

Your environment consists of the following:

- A StorNext DLC client with a single network interface

- A StorNext appliance with a dedicated private metadata network and an interface with a public IP address

- The client has connectivity to the appliance through the public IP address

The scenario above is supported if your StorNext appliance is running StorNext 6.2 or higher.

Note: The StorNext client can run StorNext 6.x or StorNext 5.x.

The fsnameservers file on the appliance contains the IP addresses of the private metadata network. The fsnameservers file on the clients contains the public IP address of the appliance. This covers metadata traffic between the clients and the MDCs, which are also configured as coordinators for the cluster.

The DLC client must have connectivity to a DLC gateway. It is common for the MDCs to be configured as gateways, and it is also common to configure additional StorNext nodes as gateways. All gateways must have access to the file system disks, usually over Fibre Channel, and these gateways typically also have connectivity to the private metadata network used by the MDCs. The dpserver file on each gateway configures which addresses are advertised to DLC clients. The gateways can advertise both the private IP address and the public IP address; a client with access only to the public network connects to the gateway using the public address. The client ends up with a TCP connection to the active FSM and a TCP connection to every gateway. Metadata traffic goes to and from the active FSM, while data traffic is load balanced among all available gateways.

For example:

MDC1 fsnameservers

192.168.100.11

192.168.100.12

MDC2 fsnameservers

192.168.100.11

192.168.100.12

Client fsnameservers

10.65.167.28 (MDC1)

10.65.137.29 (MDC2)

MDC1 dpserver

Interface em2 address 10.65.167.28

MDC2 dpserver

Interface em2 address 10.65.169.116

Note: The DLC client does not require DLC configuration.

Hostnames or, preferably, IP addresses are copied into the StorNext fsnameservers file. This file specifies the machines that serve as File System Name Server coordinators and defines the metadata networks used to reach them. A StorNext host acts as a coordinator if it sees its own IP address in its fsnameservers file. The File System Name Server coordinator is a critical component of the StorNext File System Services (FSS).

Beginning with StorNext 6, metadata traffic is permitted to flow on any interface by which the FSM host node is reachable.

Addresses in the fsnameservers file on the MDC host are used to determine preferred addresses for reaching the FSM service. If an address in fsnameservers matches the subnet of one of the MDC host's own addresses, it is considered preferred. More than one address can be marked preferred. A StorNext 6 client uses a preferred address to reach the FSM if possible, and falls back to other advertised addresses if the FSM cannot be reached on a preferred address.
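The preferred-address rule above can be illustrated with Python's standard ipaddress module. This is a minimal sketch under one reading of the rule: an MDC interface address is marked preferred when its subnet contains an fsnameservers entry. The function name and the prefix lengths in the usage example are hypothetical; StorNext applies this logic internally, and the sketch is not its implementation.

```python
import ipaddress
from typing import Iterable, List


def preferred_addresses(own_interfaces: Iterable[str],
                        fsnameservers: Iterable[str]) -> List[str]:
    """Return this MDC's addresses that share a subnet with an fsnameservers entry.

    own_interfaces: interface addresses in CIDR form, e.g. "192.168.100.11/24"
    (a hypothetical input format). fsnameservers: plain IP address entries.
    """
    nameservers = [ipaddress.ip_address(a) for a in fsnameservers]
    preferred = []
    for text in own_interfaces:
        iface = ipaddress.ip_interface(text)
        # Preferred if any fsnameservers entry lies on this interface's subnet.
        if any(ns in iface.network for ns in nameservers):
            preferred.append(str(iface.ip))
    return preferred


# MDC1 from the scenario: a private metadata interface and a public interface.
# The /24 and /16 prefix lengths are assumptions for illustration.
mdc1_interfaces = ["192.168.100.11/24", "10.65.167.28/16"]
mdc_fsnameservers = ["192.168.100.11", "192.168.100.12"]
print(preferred_addresses(mdc1_interfaces, mdc_fsnameservers))  # ['192.168.100.11']
```

In this reading both MDC1 addresses can still be advertised; only the private metadata address is marked preferred, so a client that cannot reach it falls back to the public address.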

Node Status Services

Node Status Service version 2 (NSS2) clients receive all advertised IP addresses. The cvadmin command shows which addresses are advertised and which of those are preferred.

Only one address is sent to Node Status Service version 1 (NSS1) clients. The StorNext 6 name service selects what it deems to be the best address for this client to reach the FSM for a given file system. It is possible to limit the networks over which metadata traffic flows by configuring a metadata network filter on MDC hosts.

The filter prunes the set of addresses that are advertised to clients. This is particularly helpful when configured addresses are not connected to anything or are connected to networks not reachable by any clients. See the snfs_metadata_network_filter.json man page for details.

When MDCs and coordinators are running StorNext 6.2 or later, the fsnameservers file no longer has to be the same on all StorNext clients. For example, a StorNext client may have a single high-speed network interface that is not directly connected to the configured metadata network. That client's fsnameservers file should contain the coordinator address on the same network as the client's own interface. A client may also need to reach the StorNext services through a router because it is not on any network directly connected to them. In this case, the client's fsnameservers file should contain the best reachable address for each coordinator. This applies both to StorNext 6 clients and to clients running releases earlier than StorNext 6, such as macOS clients.

StorNext file systems are organized into clusters. Clusters, in turn, belong to an administrative domain. A cluster consists of MDCs and coordinators (the MDCs may also act as coordinators). The fsnameservers file on each coordinator must include the address of the metadata network interface on that coordinator. It is highly recommended that the fsnameservers file be the same on all MDCs and coordinators in the cluster.

MDCs and coordinators are considered to be part of a cluster. A client may or may not consider itself part of a specific cluster since it may be able to access services from more than one cluster. A client's fsnameservers file would then list the IP addresses of the coordinators of all the clusters for which it wants to access file systems. If a client uses the services of one cluster most or all of the time, it can configure the fsmcluster file. This is useful when the cluster it is accessing does not have the default cluster name.

When services start on a client, a message is sent to all IP addresses in the fsnameservers file. The coordinator that sends the first reply for a given cluster becomes the primary coordinator for that cluster for this client. A secondary coordinator is chosen, if available. If access to the primary coordinator is lost, the secondary is promoted to primary and a new secondary is chosen.
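The primary/secondary selection described above can be modeled as follows. This is a hypothetical Python sketch of the described behavior for a single cluster, not StorNext's implementation; the class and method names are illustrative, and the order of calls to on_reply stands in for the order in which replies arrive over the network.

```python
from typing import Iterable, Optional


class CoordinatorSelection:
    """Tracks the primary and secondary coordinator for one cluster (hypothetical model)."""

    def __init__(self) -> None:
        self.primary: Optional[str] = None
        self.secondary: Optional[str] = None

    def on_reply(self, address: str) -> None:
        """Handle a reply to the startup message, in arrival order."""
        if self.primary is None:
            self.primary = address  # the first reply becomes the primary
        elif self.secondary is None and address != self.primary:
            self.secondary = address  # a later reply supplies the secondary

    def on_primary_lost(self, reachable: Iterable[str]) -> None:
        """Promote the secondary and pick a new secondary from reachable coordinators."""
        lost = self.primary
        self.primary = self.secondary
        self.secondary = next(
            (a for a in reachable if a not in (lost, self.primary)), None
        )


sel = CoordinatorSelection()
for reply in ["10.0.40.2", "10.0.40.1"]:  # replies arrive in this order
    sel.on_reply(reply)
print(sel.primary, sel.secondary)  # 10.0.40.2 10.0.40.1
sel.on_primary_lost(reachable=["10.0.40.1"])
print(sel.primary, sel.secondary)  # 10.0.40.1 None
```

With only two coordinators, losing the primary leaves no candidate for a new secondary, which is one reason the text recommends at least two reliable coordinator machines.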

A principal function of the coordinator is to manage failover voting in a high-availability (HA) configuration. Therefore, it is critical to select highly reliable systems as coordinators. You can provide redundancy by listing the IP addresses of multiple machines in the fsnameservers file, one entry per line. The first IP address listed defines the path to the primary coordinator. You can then specify a redundant path to this coordinator. Any subsequent IP addresses listed serve as paths to backup coordinators. To create redundancy, Quantum recommends that you select two machines to act as coordinators. Typically, the selected systems are also configured for FSM services, but this is not a requirement.

If the fsnameservers file does not exist, is empty, or contains the localhost IP address (127.0.0.1), the file system operates as a local file system, with both the client and the server running on the same host. The file system does not communicate with any other StorNext File System product on the network, so the FSS cannot be shared over the SAN.
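A minimal Python sketch of the local-mode rule above, for illustration only. The function name is hypothetical, it takes the file contents rather than a path (None meaning the file does not exist), and treating a whitespace-only file as empty is an assumption.

```python
from typing import Optional

LOCALHOST = "127.0.0.1"


def runs_as_local_file_system(contents: Optional[str]) -> bool:
    """Apply the local-mode rule to fsnameservers contents (None = file missing)."""
    if contents is None:
        return True  # the file does not exist
    # Assumption: blank or whitespace-only lines carry no entries.
    entries = [line.strip() for line in contents.splitlines() if line.strip()]
    if not entries:
        return True  # the file is empty
    # Compare only the address part, ignoring any @cluster suffix on the entry.
    return any(entry.split("@")[0].strip() == LOCALHOST for entry in entries)


print(runs_as_local_file_system(None))                      # True
print(runs_as_local_file_system("127.0.0.1\n"))             # True
print(runs_as_local_file_system("10.0.40.1\n10.0.40.2\n"))  # False
```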

The addresses in the fsnameservers file define the metadata networks and therefore the addresses used to access file system services. When a heartbeat is sent to a name server, the name server records the source IP address from the UDP packet and uses it as the address to advertise for FSMs local to that host.

If a nameserver receives multiple heartbeats on redundant metadata network interfaces, each metadata network will have a different source address for the same FSM and host. The name server selects only one of the metadata network addresses to use as the address of the FSM that is advertised to all hosts in the cluster. Thus all metadata traffic uses only one of the redundant metadata networks.

If the network being advertised for file system services fails, a backup network is selected for FSM services. However, clients do not necessarily reconnect using the new address. If a client maintains TCP connectivity using the old address, no reconnect is necessary. If the client needs to connect or re-connect, it will use the currently advertised IP address of the file system services.

An NSS version 2 (NSS2) coordinator supports NSS1 and NSS2 clients. The coordinator uses the NSS1 protocol for older NSS1 clients and NSS2 for newer NSS2 clients. The NSS2 client receives a list of IP addresses over which the FSM process is potentially reachable. The client attempts to connect using the preferred addresses, those that appear in the fsnameservers file on the coordinators and MDCs. If the client fails to connect using these addresses, it attempts to connect using the remaining addresses. If it succeeds, it uses the successful address and network for metadata traffic. If no connection can be made to the FSM, the mount fails.
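The NSS2 connection order described above can be sketched in Python as follows. This is an illustrative model only: the function name is hypothetical, and try_connect is a stand-in for whatever connection attempt the client actually makes. The addresses come from the scenario at the start of this topic.

```python
from typing import Callable, Iterable, Optional, Set


def choose_fsm_address(advertised: Iterable[str],
                       preferred: Set[str],
                       try_connect: Callable[[str], bool]) -> Optional[str]:
    """Try preferred addresses first, then the rest; None means the mount fails."""
    advertised = list(advertised)
    ordered = ([a for a in advertised if a in preferred] +
               [a for a in advertised if a not in preferred])
    for address in ordered:
        if try_connect(address):
            return address  # this address and its network carry metadata traffic
    return None


# A client that can reach only MDC1's public address.
reachable = {"10.65.167.28"}
chosen = choose_fsm_address(
    advertised=["192.168.100.11", "10.65.167.28"],
    preferred={"192.168.100.11"},
    try_connect=lambda addr: addr in reachable,  # stand-in for a TCP connect attempt
)
print(chosen)  # 10.65.167.28
```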

The StorNext GUI supports updating the fsnameservers file on the MDC node or MDC HA pair, and changes applied through the GUI are picked up by the StorNext services. You must configure the fsnameservers file on client nodes manually.

Note: On client nodes, you must restart the StorNext services after changing the fsnameservers file for the change to take effect.

The following NSS2 best practices assume that you are configuring a named cluster environment, which may consist of one cluster or multiple clusters.

Note: If you choose not to use named clusters, you should not have to modify any configuration after upgrading your clusters to StorNext 6. Features such as foreign servers continue to function as they did in previous releases of StorNext.

- The fsmcluster file should be set up on every MDC and NSS coordinator to specify the default cluster for that node. This can be as simple as:

default_cluster cluster1

Note: It is not necessary to specify an administrative domain; the default is _addom0.

- Entries in the fstab must have the @cluster/addom information added for all file systems not in the default cluster. For example, assume that you have the above setting in fsmcluster, making cluster1 the default for this node. If another cluster, cluster2, is available and you want to mount file systems in that cluster, the fstab entry would be the following:

snfs2@cluster2 /stornext/snfs2 cvfs diskproxy=client 0 0

Then, to mount this file system:

mount snfs2@cluster2

For file systems in your default cluster, the fstab entry and the mount command remain as in previous releases. For Windows clients, the cluster information appears with the file system name in the client configuration utility; simply select the entry and proceed.

Note: With NSS2, it is possible to mount a file system with the same name from two different clusters at the same time.

- The fsnameservers file should have cluster information added. Entries without explicit cluster information are supported for backward compatibility, so that a properly configured StorNext 5.4.x system can be upgraded to StorNext 6 without any configuration changes and continue to run as an isolated cluster.

- The “<ipaddr> @cluster/addom” form (with a space) provides backward compatibility when the same fsnameservers file used for a StorNext 6 client is also installed on a StorNext 5.4.x or earlier client.

Otherwise, the “<ipaddr>@cluster/addom” form (without a space) is clearer in an all-StorNext 6 environment, but a StorNext 5.x system would not parse it properly.

The fsnameservers file should be set up on clients with the addresses of the coordinators of all clusters that are running file systems that the client would like to mount. The following is an example of an fsnameservers file that points at two sets of coordinators for two different clusters.

10.0.40.1@cluster1

10.0.40.2@cluster1

10.0.40.3@cluster2

10.0.40.4@cluster2

The MDC node's fsnameservers file can be set up the same as the client. But if the MDC is also acting as a coordinator, it must follow the coordinator rules shown below.

- NSS coordinators should not be configured as coordinators for multiple clusters, even though the software supports this. Continuing the example above, a coordinator's fsnameservers file might look like this:

10.0.40.1@cluster1

10.0.40.2@cluster1

In the example above, one of the IP addresses in the list would be assigned to an interface on the coordinator node. A coordinator knows it is a coordinator for a cluster if it sees its own address in the fsnameservers file. A second set of coordinator nodes would have the following:

10.0.40.3@cluster2

10.0.40.4@cluster2

The following is not allowed because the same IP address is being used twice:

10.0.40.1@cluster1

10.0.40.1@cluster2

Coordinators can be configured to see clusters other than the one for which they are the coordinator. For example, the following is acceptable:

/usr/cvfs/config # cat fsnameservers

10.65.179.145@_cluster0

10.65.179.217@_cluster0

10.65.175.175@cluster1

10.65.173.215@cluster1

/usr/cvfs/config #

StorNext 6 provides a new cvadmin subcommand to display known coordinators. This may help you properly set up the fsnameservers file:

cvadmin> coord

Cluster: cluster1@_addom0

ID: 0:ffff0a41afaf flags=0x33f RAS G-RAS xFSLIST 63K SLOW_HB RAS_V2 NSS2

STATIC

10.65.175.175

ID: 0:ffff0a41add7 flags=0x33f RAS G-RAS xFSLIST 63K SLOW_HB RAS_V2 NSS2

STATIC

10.65.173.215

Cluster: _cluster0@_addom0

ID: 0:ffff0a41b391 flags=0x33f RAS G-RAS xFSLIST 63K SLOW_HB RAS_V2 NSS2

STATIC

10.65.179.145

ID: 0:ffff0a41b3d9 flags=0x700 NSS2 STATIC LOCAL

10.65.179.217

- The fsmlist file should not contain cluster name information. StorNext 6 does support adding cluster information, but all FSMs running on a given node belong to the same cluster.

- Services (such as file systems) and coordinators must belong to a specific cluster. Client nodes can create an fsmcluster file for convenience, specifying their default cluster. Client nodes do not technically belong to that cluster, but they can access services from it.

- The nss_cctl feature allows you to configure client access rights to administrative tasks, such as starting and stopping file systems. It is implemented by creating the nss_cctl.xml file on the coordinator nodes; the file has no meaning on nodes that are not coordinators. There is a separate file for each cluster on which controls are implemented. If the fsmcluster file exists, the plain nss_cctl.xml file applies to the default cluster specified in that file. If no fsmcluster file exists, the file name takes the form nss_cctl.cluster.addom.xml. Quantum recommends that you use the fsmcluster file together with nss_cctl.xml.

StorNext 6 (and later) provides the nss_cctl_template command, which creates an nss_cctl.xml file with host names and file systems that match what it currently sees in the cluster where it is run.

- The purpose of cluster names is to identify each FSM uniquely by two identifiers: the file system name and the cluster name. Before cluster names were introduced, the only way for a client to access more than one cluster was through the fsforeignservers file, and all discovered FSM names were kept in a flat namespace, so FSM names had to be unique across all clusters. With the addition of cluster names in StorNext 6, FSM names only need to be unique within a cluster, because the cluster name disambiguates FSMs.

In the same manner, taking advantage of the administrative domain name allows the same cluster name to be used in different administrative domains. Previously, cluster names had to be unique across administrative domains. In StorNext 6, they only need to be unique within the administrative domain to which they belong.

An example of using multiple administrative domains would be a company that has two StorNext environments: a production environment and a development environment. By giving each environment a unique administrative domain name, you can create cluster names within each domain without worrying about clashing with other domains.

Quantum recommends that when you configure cluster names, you also configure administrative domain names at the same time, even if all the clusters are under one administrative domain. Because the fsmcluster file is being configured anyway, explicitly set both the default cluster name and the administrative domain name in it. The defaults of _cluster0/_addom0 should only be used when cluster names are not explicitly configured, such as during the initial upgrade from a StorNext 5.x system.

Caution: If you want to change the default cluster name (_cluster0), see the man page for fsmcluster (available via the CLI, or within the Man Pages Reference Guide available online at the StorNext 6 Documentation Center). You must follow the strict procedure outlined in the fsmcluster man page. This applies to any change to the cluster name; for example, from the default to a non-default name (_cluster0 to _cluster123), or from one non-default name to another (mycluster123 to mycluster456).
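The fsnameservers entry formats and coordinator rules in the best practices above can be summarized in a small parser. This is a hypothetical Python sketch, not StorNext code: the class and function names are illustrative, it assumes that an entry without cluster information belongs to _cluster0/_addom0, it skips only blank lines, and it rejects an address listed twice, following the rule stated for coordinator files.

```python
from dataclasses import dataclass
from typing import Iterable, List, Set

DEFAULT_CLUSTER = "_cluster0"  # assumption: used when an entry names no cluster
DEFAULT_ADDOM = "_addom0"      # default administrative domain


@dataclass(frozen=True)
class NameServerEntry:
    address: str
    cluster: str
    addom: str


def parse_entry(line: str, default_cluster: str = DEFAULT_CLUSTER,
                default_addom: str = DEFAULT_ADDOM) -> NameServerEntry:
    """Parse "<ip>", "<ip>@cluster[/addom]", or "<ip> @cluster[/addom]"."""
    address, _, qualifier = line.strip().partition("@")
    cluster, _, addom = qualifier.strip().partition("/")
    return NameServerEntry(address.strip(),
                           cluster or default_cluster,
                           addom or default_addom)


def parse_fsnameservers(text: str) -> List[NameServerEntry]:
    """Parse file contents, skipping blank lines and rejecting a reused address."""
    entries: List[NameServerEntry] = []
    seen: Set[str] = set()
    for line in text.splitlines():
        if not line.strip():
            continue
        entry = parse_entry(line)
        if entry.address in seen:
            raise ValueError(f"{entry.address} is listed more than once")
        seen.add(entry.address)
        entries.append(entry)
    return entries


def coordinated_clusters(entries: Iterable[NameServerEntry],
                         own_addresses: Set[str]) -> Set[str]:
    """A node coordinates each cluster whose entries include one of its own addresses."""
    return {f"{e.cluster}@{e.addom}" for e in entries if e.address in own_addresses}


example = """\
10.65.179.145@_cluster0
10.65.179.217@_cluster0
10.65.175.175@cluster1
10.65.173.215@cluster1
"""
entries = parse_fsnameservers(example)
print(coordinated_clusters(entries, own_addresses={"10.65.179.217"}))
# {'_cluster0@_addom0'}
```

The example file is the coordinator listing shown earlier; the node that owns 10.65.179.217 (reported as LOCAL by cvadmin coord) coordinates only _cluster0, while it can still see cluster1.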

When you access this page by choosing Name Servers from the Configuration menu, a list of any previously entered IP addresses appears. A green check mark icon under the Enabled column heading indicates that the server is currently enabled as a name server. A red X icon indicates that the server is not currently enabled.

Note: Skip this process if you are using NSS2.

StorNext allows you to specify the order in which name servers are used.

- On the Configuration menu, click Name Servers. Select a name server and then click Move Up or Move Down until the selected server is in the correct order.

- Enter the IP address of the server that you want to add in the field to the left of the Add button.

- Click Add.

- When the confirmation message appears, click Yes to proceed or No to abort.

- When the new name server appears in the list of available name servers, you can reposition it in the list by using the Move Up or Move Down buttons.

Caution: If you are using NSS1, adding a name server (and making the other configuration changes discussed below) changes the fsnameservers file and affects all servers and clients on your SAN. All servers and clients MUST have the same name server file to ensure correct SAN operation. After the name server file has been updated on the server, the SAN administrator must copy it to ALL connected clients and then restart StorNext File System services on those clients.

If you are using NSS2, the fsnameservers files on all MDCs and coordinators in a cluster must contain the same set of IP addresses. If a coordinator is to be replaced, a new coordinator should be brought online during the transition. For more information on how to upgrade coordinators without disrupting services to the clients, see the fsnameservers man page in the StorNext Man Pages Reference Guide.

- Click Apply to accept the changes or click Reset to abort the operation.

Note: Although not required, you might want to disable the name server before deleting it to prevent complications.

- Select the name server you want to delete and then click Delete.

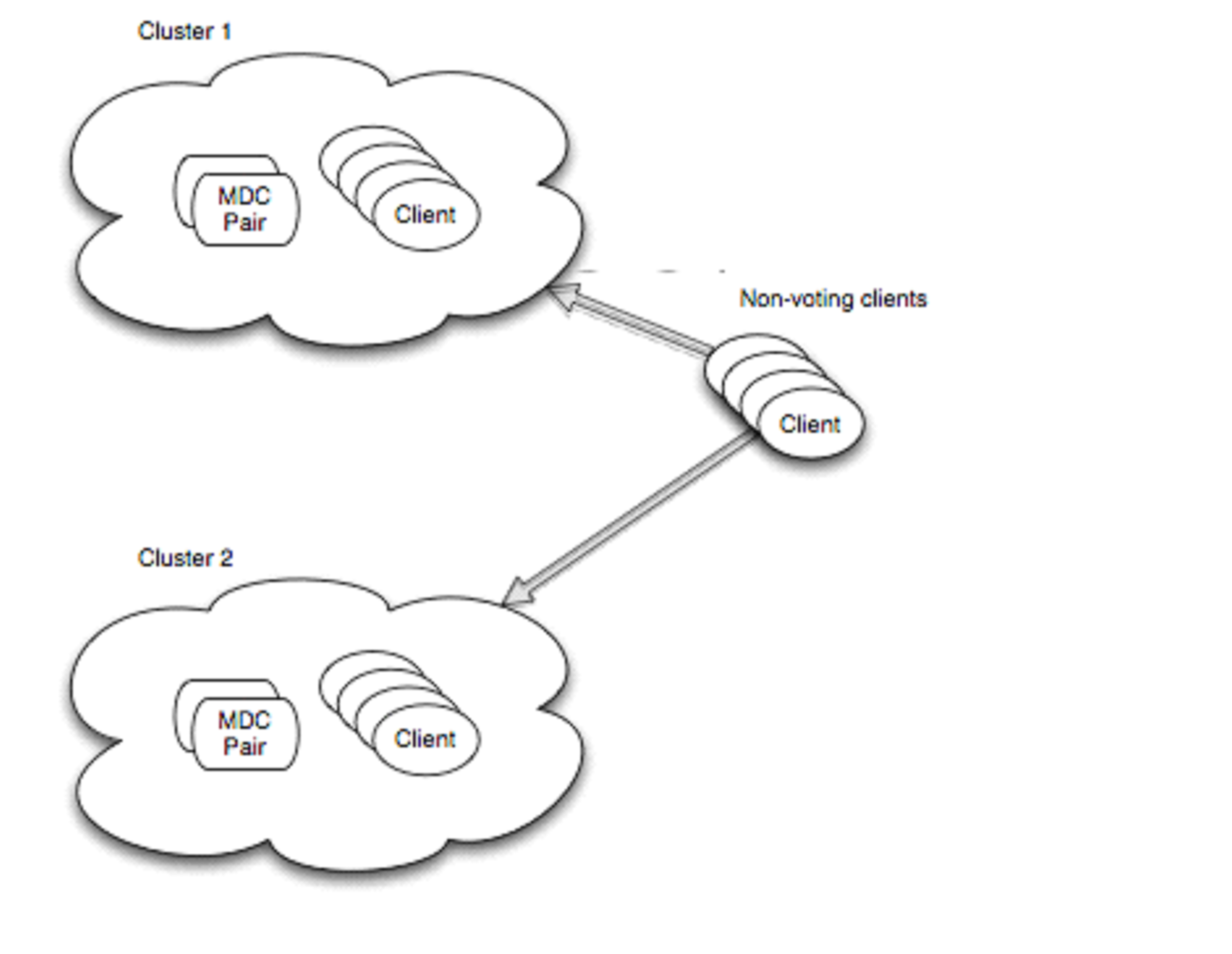

The StorNext name service supports the concept of a foreign server. By using foreign servers, StorNext client nodes can mount file systems that are not local to the client's home cluster. Additionally, a client may belong to no StorNext cluster by having an empty or non-existent fsnameservers file.

Having clusters serve foreign clients can help you address some scalability and topology issues that occur in the traditional client model. Depending on your needs, having traditional clients, foreign clients or a mixture may result in the best performance and functionality.

Configuring foreign servers requires creating the fsforeignservers file on client nodes, which is created in the cvfs config directory.

Note: You must edit the fsforeignservers file using an ASCII text editor.

Configuring foreign servers allows customers to better scale large numbers of clients. Because foreign clients do not participate in FSM elections, a lot of the complexity and message exchange in the voting process is eliminated. In a typical StorNext HA environment, clients have equal access to both candidates, which makes the choice of active FSM more of a load balancing decision than one of access.

Another benefit of using foreign servers is that certain topology environments prevent all clients from having equal access to all file systems and associated primary storage. By selecting the set of file system services for each client through the foreign servers configuration, the client sees only the relevant set of file systems.

The format for the fsforeignservers file is similar to the fsnameservers file in that it contains a list of IP addresses or hostnames, preferably IP addresses. One difference is that the addresses in the fsforeignservers file are MDC addresses, addresses of hosts that are running the FSM services. This is in contrast to the fsnameservers file, where the name server coordinators specified may or may not also be acting as MDCs.

In HA configurations, you would specify both the active and the standby MDCs that are hosting FSMs for the file system in the fsforeignservers file.

No additional configuration is needed on the MDCs that act as foreign servers. Foreign clients send heartbeat messages to the addresses in the fsforeignservers file. The heartbeat rate is once every 5 seconds. The nodes reply to these messages with a list of local, active FSMs and the addresses by which they may be reached.

After you have created the fsforeignservers file, you can restart services, and mount the file systems available through these services. All the usual requirements of a file system client apply. In addition, the client must have access to the primary storage disks or use the LAN client mount option.

Note: For HA setups, the ha_vip address can be entered in the fsforeignservers file.

NSS2 supports foreign servers, but using cluster configurations may be preferred. The foreign server service uses the NSS1 protocol.

The addition of name server hosts to the configuration will increase the amount of name server traffic on the metadata network. Using a redundant metadata network with multi-homed name servers further increases the load.

Note: This section describes the NSS1 protocol. With NSS2, each heartbeat does not contain all file system information: if nothing has changed, the heartbeat only confirms this to the client, and when something changes, only the changes are propagated. This greatly reduces NSS traffic over the metadata network compared with NSS1. Also, in NSS1 a heartbeat is sent to all coordinators; each coordinator replies, but only the first reply is processed and the remaining replies are discarded. With NSS2, a heartbeat is sent only to the client's primary coordinator. The primary coordinator is selected when services are started and is the first coordinator in a cluster whose reply the client processes.
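The difference between full NSS1 replies and NSS2 change propagation can be illustrated by computing a change set between two snapshots of the FSM list. This Python sketch is hypothetical: the table layout (an FSM name mapped to its advertised address) is an assumption for illustration and does not describe the NSS2 wire format.

```python
from typing import Dict, List, Tuple

FsmTable = Dict[str, str]  # hypothetical layout: "fsname@cluster" -> advertised address


def heartbeat_delta(previous: FsmTable, current: FsmTable) -> Tuple[FsmTable, List[str]]:
    """Return (added_or_changed, removed) between two FSM snapshots.

    An empty result corresponds to a heartbeat that only confirms nothing changed;
    an NSS1-style reply would instead carry the whole current table every time.
    """
    changed = {name: addr for name, addr in current.items()
               if previous.get(name) != addr}
    removed = sorted(name for name in previous if name not in current)
    return changed, removed


before = {"snfs1@cluster1": "10.0.40.1", "snfs2@cluster2": "10.0.40.3"}
after = {"snfs1@cluster1": "10.0.40.2", "snfs2@cluster2": "10.0.40.3"}
print(heartbeat_delta(before, before))  # ({}, [])
print(heartbeat_delta(before, after))   # ({'snfs1@cluster1': '10.0.40.2'}, [])
```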

To help you weigh the benefits and disadvantages of having multiple name server hosts and redundant metadata networks, here are some points to consider:

- The fsnameservers file must be the same for all MDCs.

- Metadata controllers need not be name servers.

- Each additional fsnameservers entry adds additional heartbeats from every file system host.

- If multiple metadata networks service an individual file system, each network must have an fsnameservers interface. Each fsnameservers host must have network interface(s) on every metadata network, and each interface must be listed in the fsnameservers file.

- At the maximum heartbeat rate, a host sends a heartbeat message to every fsnameservers entry twice per second. The maximum rate is in effect on a given host when StorNext services are first started, and during transition periods when an FSM is starting or failing over. Thirty seconds after services are started, and when the cluster is stable, non-MDC hosts reduce their heartbeat rate to once every 5 seconds.

- Each heartbeat results in a heartbeat reply back to the sender.

- The size of the heartbeat and reply message depends on the number of file systems in the cluster.
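The heartbeat-rate rules in the list above can be expressed as two small functions. This Python sketch is illustrative only: the function names are hypothetical, and it assumes that only non-MDC hosts slow down to the stable rate, with MDC hosts staying at the maximum rate.

```python
MAX_RATE_INTERVAL_S = 0.5  # twice per second
STABLE_INTERVAL_S = 5.0    # once every 5 seconds
SETTLE_TIME_S = 30.0       # time after service start before a host may slow down


def heartbeat_interval(seconds_since_start: float, cluster_stable: bool,
                       is_mdc: bool) -> float:
    """Seconds between heartbeats to each fsnameservers entry."""
    if is_mdc:
        return MAX_RATE_INTERVAL_S  # assumption: only non-MDC hosts slow down
    if seconds_since_start >= SETTLE_TIME_S and cluster_stable:
        return STABLE_INTERVAL_S
    return MAX_RATE_INTERVAL_S  # startup or transition period


def heartbeats_per_second(num_entries: int, interval_s: float) -> float:
    """Heartbeat messages one host sends per second across all fsnameservers entries."""
    return num_entries / interval_s


# A client with 4 fsnameservers entries, during a transition and when stable.
print(heartbeats_per_second(4, heartbeat_interval(10, False, False)))  # 8.0
print(heartbeats_per_second(4, heartbeat_interval(60, True, False)))   # 0.8
```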

The following section may help you estimate the network bandwidth required for name server traffic in a cluster. This example assumes a transition period in which all hosts are sending heartbeat messages twice per second.

- Every host sends a heartbeat packet to every name server address, twice per second. If the host is an MDC, the heartbeat packet contains a list of FSMs running locally.

- Each name server maintains the master list of FSMs in the cluster. The heartbeat reply contains the list of all FSMs in the cluster.

- The NSS packet is 72 bytes, plus the file system entries. Each file system entry is 24 bytes plus the name of the file system (one byte per character), including a zero byte to terminate the string.

The file system name is always rounded up to the next 8-byte boundary. For example, a file system name of 7 characters or less would be rounded up to 8 bytes, and a file system name with 8-15 characters would be rounded up to 16 bytes. If there is room in the packet, a list of file systems which are mounted, or could be mounted, is also included.

- The heartbeat message size from non-MDC clients is small because there are no locally running FSMs. The heartbeat reply message size is significant because it contains file system locations for all FSMs in the cluster.

- The maximum name server packet size is 63 KB (64512 bytes). This allows up to 1611 FSMs with names of 7 characters or less. With file system names of 8-15 characters, the maximum packet can hold entries for 1342 FSMs. In configurations where the maximum packet size is reached, each host would receive 129024 bytes per second from each address in the fsnameservers file. This is roughly 1 Mbit per second per host/address. In a configuration with dual multi-homed name servers, there would be 4 addresses in the fsnameservers file, so each host would receive 4 Mbits per second of heartbeat reply data at the maximum heartbeat rate (twice per second).

- A large cluster with 500 hosts, 1600 FSMs, and 4 fsnameservers addresses would produce an aggregate of about 500*4, or 2000 Mbits (2 Gbits), of heartbeat reply messages per second. If the 4 fsnameservers addresses belonged to two name servers, each server would be generating 1 Gbit of heartbeat reply messages per second.

Note: During stable periods, the heartbeat rate for non-MDC hosts decreases to one tenth of this rate, reducing the heartbeat reply rate by an equivalent factor.

- The metadata network carries more than just name server traffic. All metadata operations such as open, allocate space, and so on use the metadata network. File system data is often carried on the metadata network when LAN clients and servers are configured. Network capacity must include all uses of these networks.
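The heartbeat reply arithmetic above can be reproduced with a short Python sketch. It encodes only the figures stated in this section (72-byte header, 24-byte entries, names padded to 8 bytes including the terminating zero byte, 64512-byte maximum packet, two heartbeats per second); the function names are hypothetical, and the estimate ignores the optional list of mounted file systems and all other metadata traffic.

```python
from typing import Iterable

HEADER_BYTES = 72          # fixed part of every NSS packet
ENTRY_BYTES = 24           # fixed part of each file system entry
MAX_PACKET_BYTES = 64512   # 63 KB maximum name server packet


def name_bytes(fs_name: str) -> int:
    """One byte per character plus a terminating zero byte, rounded up to 8."""
    raw = len(fs_name) + 1
    return (raw + 7) // 8 * 8


def reply_packet_bytes(fs_names: Iterable[str]) -> int:
    """Estimated reply size for the listed FSMs (optional mounted-file-system list ignored)."""
    return HEADER_BYTES + sum(ENTRY_BYTES + name_bytes(n) for n in fs_names)


def reply_bits_per_second(packet_bytes: int, addresses: int,
                          heartbeats_per_second: float = 2) -> float:
    """Heartbeat reply traffic one host receives from all fsnameservers addresses."""
    return packet_bytes * heartbeats_per_second * addresses * 8


# Worked example from the list above, at the maximum packet size.
per_address = reply_bits_per_second(MAX_PACKET_BYTES, addresses=1)
per_host = reply_bits_per_second(MAX_PACKET_BYTES, addresses=4)
cluster_total = 500 * per_host  # 500 hosts, 4 fsnameservers addresses each
print(per_address)    # 1032192 bits/s, roughly 1 Mbit/s
print(per_host)       # 4128768 bits/s, roughly 4 Mbit/s
print(cluster_total)  # 2064384000 bits/s, roughly 2 Gbit/s
```

For the stable rate of once every 5 seconds, pass heartbeats_per_second=0.2, which divides each figure by ten.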